What you will notice most is that DeepSeek is limited by not containing all of the extras you get withChatGPT. The use of DeepSeek Coder models is subject to the Model License. Superior Model Performance: State-of-the-art efficiency among publicly out there code models on HumanEval, MultiPL-E, MBPP, DS-1000, and APPS benchmarks. Step 1: Collect code information from GitHub and apply the identical filtering guidelines as StarCoder Data to filter knowledge. Step 1: Initially pre-educated with a dataset consisting of 87% code, 10% code-associated language (Github Markdown and StackExchange), and 3% non-code-associated Chinese language. That is why, as you learn these phrases, a number of dangerous actors will probably be testing and deploying R1 (having downloaded it without cost from DeepSeek’s GitHub repro). For coding capabilities, DeepSeek Coder achieves state-of-the-artwork efficiency among open-supply code models on a number of programming languages and numerous benchmarks. In summary, as of 20 January 2025, cybersecurity professionals now reside in a world the place a nasty actor can deploy the world’s high 3.7% of competitive coders, for less than the cost of electricity, to carry out giant scale perpetual cyber-assaults across a number of targets concurrently. Fortunately, the highest mannequin developers (together with OpenAI and Google) are already concerned in cybersecurity initiatives where non-guard-railed cases of their slicing-edge fashions are getting used to push the frontier of offensive & predictive safety.

What you will notice most is that DeepSeek is limited by not containing all of the extras you get withChatGPT. The use of DeepSeek Coder models is subject to the Model License. Superior Model Performance: State-of-the-art efficiency among publicly out there code models on HumanEval, MultiPL-E, MBPP, DS-1000, and APPS benchmarks. Step 1: Collect code information from GitHub and apply the identical filtering guidelines as StarCoder Data to filter knowledge. Step 1: Initially pre-educated with a dataset consisting of 87% code, 10% code-associated language (Github Markdown and StackExchange), and 3% non-code-associated Chinese language. That is why, as you learn these phrases, a number of dangerous actors will probably be testing and deploying R1 (having downloaded it without cost from DeepSeek’s GitHub repro). For coding capabilities, DeepSeek Coder achieves state-of-the-artwork efficiency among open-supply code models on a number of programming languages and numerous benchmarks. In summary, as of 20 January 2025, cybersecurity professionals now reside in a world the place a nasty actor can deploy the world’s high 3.7% of competitive coders, for less than the cost of electricity, to carry out giant scale perpetual cyber-assaults across a number of targets concurrently. Fortunately, the highest mannequin developers (together with OpenAI and Google) are already concerned in cybersecurity initiatives where non-guard-railed cases of their slicing-edge fashions are getting used to push the frontier of offensive & predictive safety.

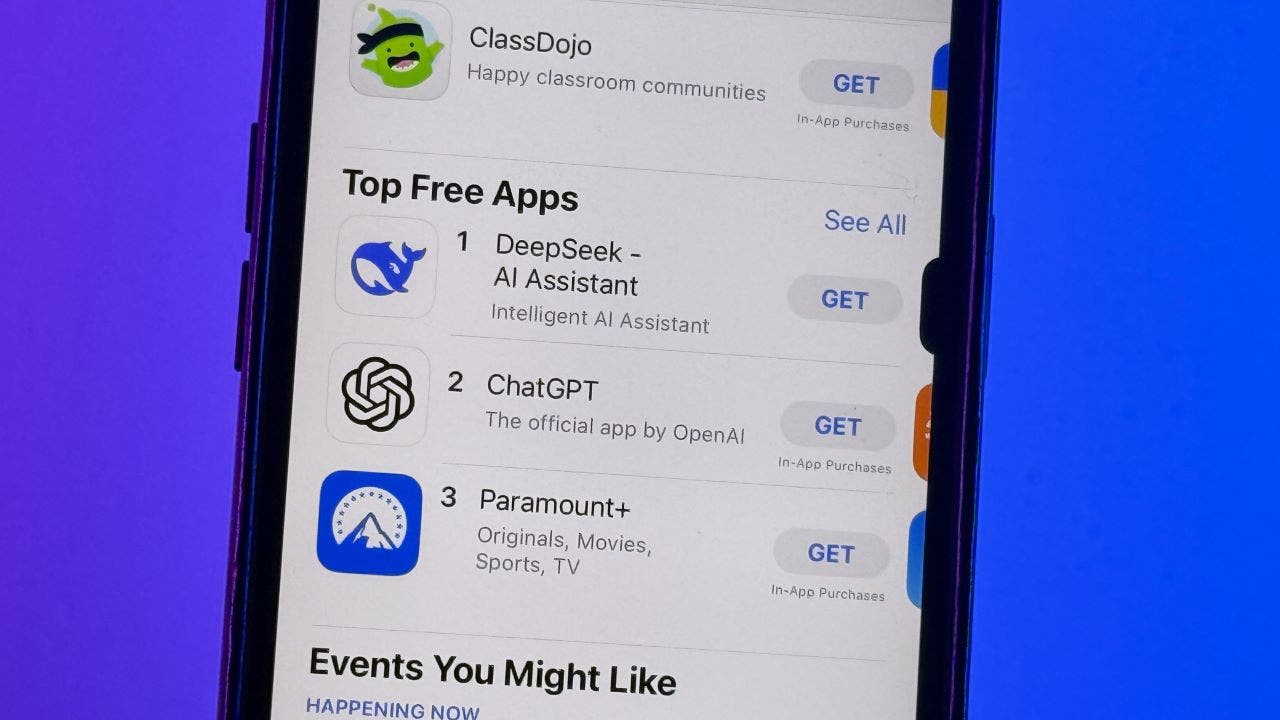

1 on the Apple Store and persistently being reviewed as a "game-changer". Impressive though R1 is, for the time being no less than, bad actors don’t have access to probably the most highly effective frontier models. Access any net application in a facet panel with out leaving your editor. Through in depth mapping of open, darknet, and deep web sources, DeepSeek zooms in to trace their net presence and determine behavioral pink flags, reveal criminal tendencies and activities, or every other conduct not in alignment with the organization’s values. We are effectively witnessing the democratisation of cybercrime; a world the place smaller criminal groups can run refined giant-scale operations previously restricted to groups able to fund teams with this stage of advanced technical experience. DeepSeek Chat can optimize your content material's structure to reinforce readability and ensure a easy stream of ideas. Step 4: Further filtering out low-quality code, equivalent to codes with syntax errors or poor readability. The total variety of plies played by deepseek-reasoner out of 58 games is 482.0. Around 12 % were unlawful. 2022. In line with Gregory Allen, director of the Wadhwani AI Center at the center for Strategic and International Studies (CSIS), the full training value might be "much larger," as the disclosed quantity solely coated the price of the final and successful training run, but not the prior research and experimentation.

1 on the Apple Store and persistently being reviewed as a "game-changer". Impressive though R1 is, for the time being no less than, bad actors don’t have access to probably the most highly effective frontier models. Access any net application in a facet panel with out leaving your editor. Through in depth mapping of open, darknet, and deep web sources, DeepSeek zooms in to trace their net presence and determine behavioral pink flags, reveal criminal tendencies and activities, or every other conduct not in alignment with the organization’s values. We are effectively witnessing the democratisation of cybercrime; a world the place smaller criminal groups can run refined giant-scale operations previously restricted to groups able to fund teams with this stage of advanced technical experience. DeepSeek Chat can optimize your content material's structure to reinforce readability and ensure a easy stream of ideas. Step 4: Further filtering out low-quality code, equivalent to codes with syntax errors or poor readability. The total variety of plies played by deepseek-reasoner out of 58 games is 482.0. Around 12 % were unlawful. 2022. In line with Gregory Allen, director of the Wadhwani AI Center at the center for Strategic and International Studies (CSIS), the full training value might be "much larger," as the disclosed quantity solely coated the price of the final and successful training run, but not the prior research and experimentation.

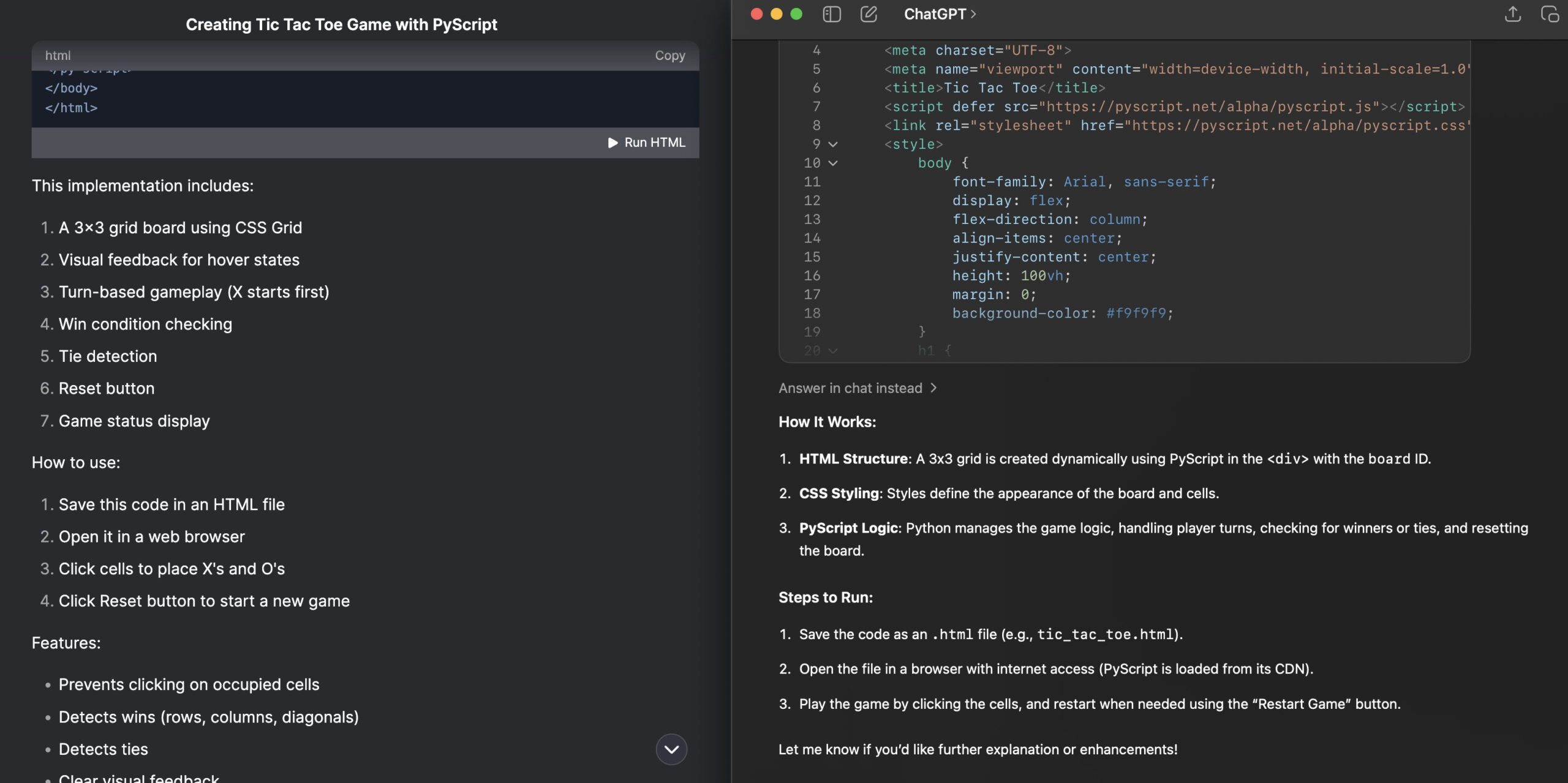

He also mentioned the $5 million cost estimate may precisely characterize what DeepSeek paid to rent certain infrastructure for training its fashions, but excludes the prior research, experiments, algorithms, data and prices related to constructing out its merchandise. 4. Fill out a short kind along with your information. Please pull the latest version and try out. What makes DeepSeek significant is the way in which it could possibly cause and learn from other fashions, together with the truth that the AI neighborhood can see what’s happening behind the scenes. Currently, there isn't a direct way to convert the tokenizer right into a SentencePiece tokenizer. To place that in perspective, this implies there are only 175 human competitive coders on the planet who can outperform o3. For US policymakers, it needs to be a wakeup name that there needs to be a better understanding of the adjustments in China’s innovation atmosphere and the way this fuels their national strategies. Many people evaluate it to Deepseek R1, and a few say it’s even better.

As an illustration, OpenAI’s already skilled and examined, but yet-to-be publicly released, o3 reasoning mannequin scored higher than 99.95% of coders in Codeforces’ all-time rankings. Could You Provide the tokenizer.model File for Model Quantization? Step 2: Parsing the dependencies of information within the same repository to rearrange the file positions based on their dependencies. Models are pre-skilled using 1.8T tokens and a 4K window size on this step. Step 2: Further Pre-coaching using an extended 16K window dimension on a further 200B tokens, resulting in foundational models (DeepSeek-Coder-Base). Each mannequin is pre-skilled on undertaking-level code corpus by employing a window dimension of 16K and an additional fill-in-the-clean job, to support challenge-level code completion and infilling. This modification prompts the model to recognize the tip of a sequence otherwise, thereby facilitating code completion duties. This model is rock solid. The evolution of AI was starting to feel a bit stale the place we were seeing each new model mixing into the identical monotonous, predictable mold. However, it has the identical flexibility as different fashions, and you'll ask it to clarify issues more broadly or adapt them to your wants. After information preparation, you should utilize the sample shell script to finetune deepseek-ai/deepseek-coder-6.7b-instruct.