A year that started with OpenAI dominance is now ending with Anthropic’s Claude being my most-used LLM and with a number of labs, from xAI to Chinese labs like DeepSeek and Qwen, all attempting to push the frontier. It excels in areas that are traditionally difficult for AI, like advanced mathematics and code generation. OpenAI's ChatGPT is perhaps the best-known application for conversational AI, content generation, and programming assistance, and it is one of the most popular AI chatbots globally. One of the newest names to spark intense buzz is DeepSeek AI. But why settle for generic options when you have DeepSeek up your sleeve, promising efficiency, cost-effectiveness, and actionable insights all in one sleek package? Start with simple requests and gradually try more advanced features. For simple test cases, it works fairly well, but only just. The fact that this works at all is surprising and raises questions about the importance of position information across long sequences.

Not only that, it will automatically bold the most important data points, letting users get key information at a glance, as shown below. This feature helps users find relevant information quickly by analyzing their queries and offering autocomplete suggestions. Ahead of today’s announcement, Nubia had already begun rolling out a beta update to Z70 Ultra users. OpenAI recently rolled out its Operator agent, which can effectively use a computer on your behalf, if you pay $200 for the Pro subscription. Event import, but didn’t use it later. This approach is designed to maximize the use of available compute resources, resulting in optimal performance and energy efficiency. For the more technically inclined, this chat-time efficiency is made possible primarily by DeepSeek's "mixture of experts" architecture, which essentially means that it comprises several specialized models rather than a single monolith. During training, each sequence is packed from multiple samples. I have two reasons for this hypothesis. DeepSeek V3 is a big deal for numerous reasons. DeepSeek offers pricing based on the number of tokens processed. Meanwhile, it processes text at 60 tokens per second, twice as fast as GPT-4o.

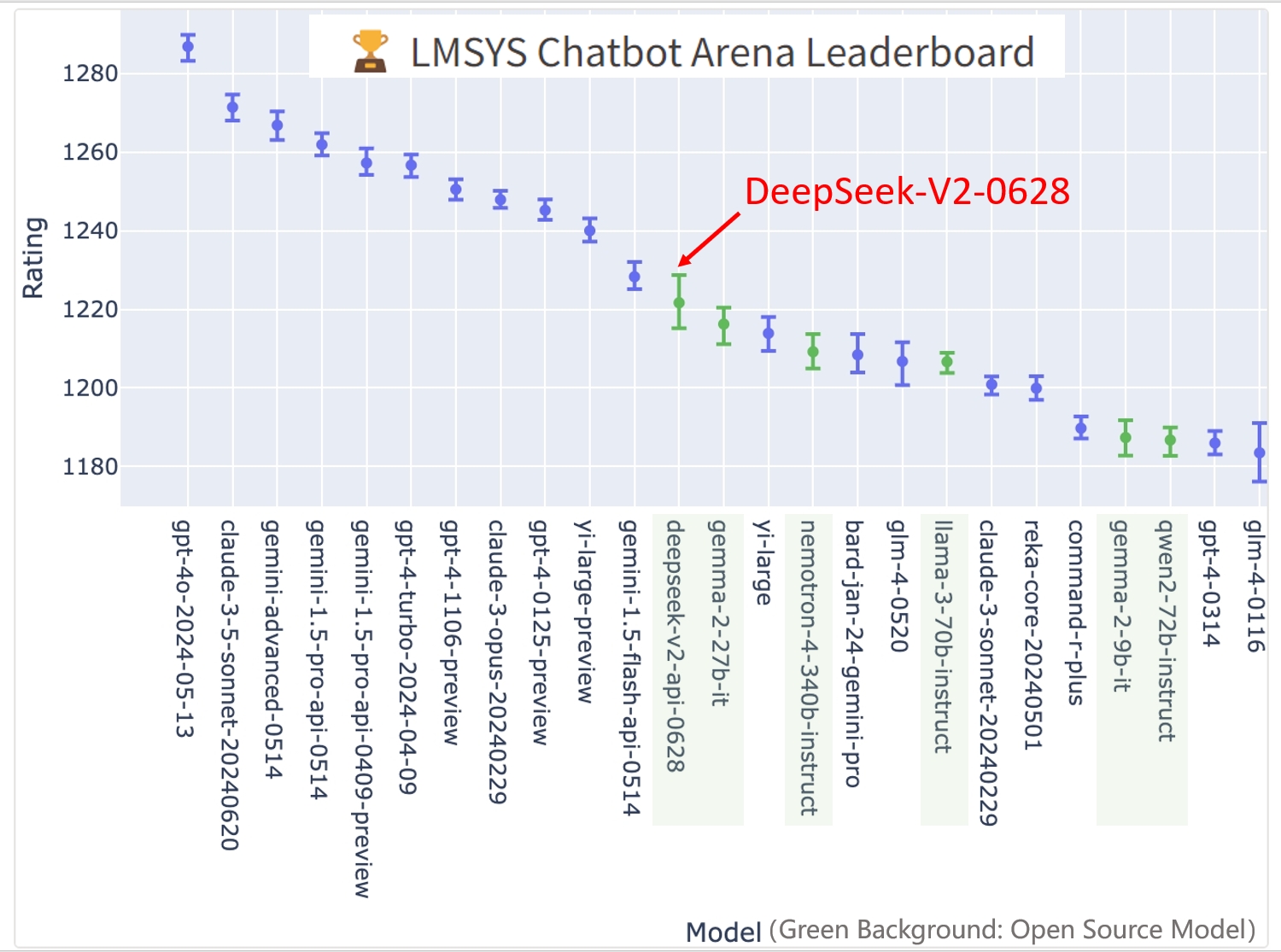
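The "mixture of experts" idea can be sketched as a gate that scores every expert for a token and then runs only the few highest-scoring ones, so most of the model stays idle for any given token. This is a generic top-k softmax router in plain Python, written for illustration only; DeepSeek's production router differs in its details, and the gate rows and toy experts below are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token through its top_k experts; return (output, chosen expert ids).

    gate_weights[i] is a hypothetical linear gate row for expert i: the token's
    affinity to expert i is the dot product of x with that row.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Sparse activation: keep only the k experts with the highest affinity.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate over the chosen experts only.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy experts that just scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
y, used = moe_forward([0.5, 0.2], gates, experts, top_k=2)
```

Only two of the four experts run for this token, which is where the compute savings of a mixture of experts come from.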
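Packing several samples into one training sequence can be sketched as concatenate-and-chunk: append an end-of-sequence token after each tokenized sample, join everything into one stream, and cut it into fixed-length rows. The token ids, `eos_id`, and `pad_id` here are hypothetical, and this is one common packing scheme, not necessarily the one DeepSeek uses.

```python
from typing import List


def pack_samples(samples: List[List[int]], seq_len: int, eos_id: int, pad_id: int) -> List[List[int]]:
    """Concatenate tokenized samples (each followed by EOS) and cut the
    resulting stream into rows of exactly seq_len tokens."""
    stream: List[int] = []
    for tokens in samples:
        stream.extend(tokens)
        stream.append(eos_id)  # marks the boundary between samples
    rows = [stream[i:i + seq_len] for i in range(0, len(stream), seq_len)]
    # Pad only the final, possibly short, row so every row has seq_len tokens.
    if rows and len(rows[-1]) < seq_len:
        rows[-1] = rows[-1] + [pad_id] * (seq_len - len(rows[-1]))
    return rows


rows = pack_samples([[1, 2, 3], [4, 5], [6, 7, 8, 9]], seq_len=5, eos_id=0, pad_id=-1)
```

Note that a sample can be split across rows (the second row above starts mid-sample). Whether tokens may attend across an EOS boundary is a separate choice; if they should not, a per-document attention mask has to be built alongside the rows.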
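Token-based pricing comes down to simple arithmetic: input and output tokens are usually billed at separate per-million-token rates. The rates in the example are placeholders, not DeepSeek's actual prices, and the 60 tokens per second default is taken from the throughput figure quoted above.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one workload, given prices per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def generation_seconds(output_tokens: int, tokens_per_second: float = 60.0) -> float:
    """Wall-clock time to stream output_tokens at a fixed generation speed."""
    return output_tokens / tokens_per_second


# Hypothetical rates: $0.50 per million input tokens, $2.00 per million output tokens.
cost = estimate_cost(200_000, 50_000, 0.5, 2.0)
seconds = generation_seconds(3_000)
```

Because output tokens typically cost more than input tokens, long generations dominate the bill even when the prompt is large.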

However, this trick may introduce token boundary bias (Lundberg, 2023) when the model processes multi-line prompts without terminal line breaks, particularly for few-shot evaluation prompts. I suppose @oga wants to use the official DeepSeek API service instead of deploying an open-source model on their own. The goal of this post is to deep-dive into LLMs that are specialized in code generation tasks and see if we can use them to write code. You can directly use Hugging Face's Transformers for model inference. Experience the power of the Janus Pro 7B model with an intuitive interface. The model goes head-to-head with, and often outperforms, models like GPT-4o and Claude-3.5-Sonnet across various benchmarks. On FRAMES, a benchmark requiring question-answering over 100K-token contexts, DeepSeek-V3 closely trails GPT-4o while outperforming all other models by a significant margin. Now we need VSCode to call into these models and produce code. I created a VSCode plugin that implements these methods and can interact with Ollama running locally.
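The plugin's flow (gather the editor's open files, build a prompt, send it to a local Ollama server) can be sketched in Python against Ollama's HTTP endpoint `POST /api/generate` on its default port 11434. This is not the author's plugin code, which as a VSCode extension would normally be written in TypeScript; the prompt layout, the `build_prompt` helper, and the `deepseek-coder:6.7b` model tag are assumptions for illustration.

```python
import json
import urllib.request
from typing import Dict

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(current_file: str, open_files: Dict[str, str], instruction: str) -> str:
    """Hypothetical layout: other open files first, then the active file, then the task."""
    parts = []
    for path, text in open_files.items():
        if path == current_file:
            continue  # the active file is added last, closest to the instruction
        parts.append(f"### File: {path}\n{text}")
    parts.append(f"### Current file: {current_file}\n{open_files[current_file]}")
    parts.append(f"### Task\n{instruction}")
    return "\n\n".join(parts)


def generate(prompt: str, model: str = "deepseek-coder:6.7b") -> str:
    """Send a non-streaming request to a locally running Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # With stream=False, Ollama returns one JSON object whose "response" holds the text.
        return json.loads(resp.read())["response"]


prompt = build_prompt(
    "main.py",
    {"utils.py": "def add(a, b):\n    return a + b", "main.py": "import utils"},
    "Add docstrings to every function.",
)
```

`generate(prompt)` only succeeds with `ollama serve` running and the model already pulled; `build_prompt` can be exercised on its own. Putting the active file last is a design guess, not something the plugin is documented to do, and a real plugin would also need to cap the total context size.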

The plugin not only pulls in the current file but also loads all of the currently open files in VSCode into the LLM context. The current "best" open-weights models are the Llama 3 series, and Meta seems to have gone all-in on training the best possible vanilla dense transformer. Large language models are undoubtedly the biggest part of the current AI wave and are currently the area where most research and investment is going. So while it’s been bad news for the big players, it might be good news for small AI startups, particularly since DeepSeek's models are open source. At only $5.5 million to train, it cost a fraction of what models from OpenAI, Google, or Anthropic cost, which often run into the hundreds of millions. The 33B models can do quite a few things correctly. Second, when DeepSeek developed MLA, they had to add other things (e.g., an odd concatenation of positionally encoded and non-positionally-encoded components) beyond just projecting the keys and values, because of RoPE.
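The concatenation in MLA can be illustrated for a single attention head: each query and key is the concatenation of a part with no positional encoding and a small part rotated by RoPE, so the attention logit is the sum of a position-free term and a term that depends only on the relative offset between query and key. This is a plain-Python sketch with made-up vectors; it omits MLA's low-rank key/value compression and is not DeepSeek's implementation.

```python
import math
from typing import List


def rope(vec: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotary position embedding: rotate each (even, odd) pair by pos * theta_j,
    with theta_j = base ** (-2j / d) for pair index j."""
    d = len(vec)
    out: List[float] = []
    for i in range(0, d, 2):  # i = 2j is the element index of the pair's first entry
        angle = pos * base ** (-i / d)
        c, s = math.cos(angle), math.sin(angle)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def decoupled_score(q_nope: List[float], q_rope: List[float],
                    k_nope: List[float], k_rope: List[float],
                    q_pos: int, k_pos: int) -> float:
    """Logit for a query/key laid out as [no-PE part ; RoPE part]."""
    q = q_nope + rope(q_rope, q_pos)  # list concatenation, not addition
    k = k_nope + rope(k_rope, k_pos)
    return dot(q, k)


qn, qr = [0.3, -0.1], [0.5, 0.2, -0.4, 0.1]
kn, kr = [0.7, 0.2], [0.1, -0.3, 0.6, 0.25]
near = decoupled_score(qn, qr, kn, kr, q_pos=5, k_pos=2)
far = decoupled_score(qn, qr, kn, kr, q_pos=105, k_pos=102)  # same offset of 3
```

`near` and `far` agree up to floating-point error because only the offset matters for the rotated part. Roughly speaking, keeping the rotation out of the first part is what lets the key up-projection be merged into the query side, since a position-dependent rotation would otherwise sit between them.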