In recent weeks, Chinese artificial intelligence (AI) startup DeepSeek has released a set of open-source large language models (LLMs) that it claims were trained using only a fraction of the computing power needed to train some of the top U.S.-made LLMs. The startup hired young engineers, not experienced industry hands, and gave them freedom and resources to do "mad science" aimed at long-term discovery for its own sake, not product development for next quarter. Did U.S. hyperscalers like OpenAI end up spending billions building competitive moats or a Maginot line that merely gave the illusion of security? I gave the opening keynote at the AI Engineer World’s Fair yesterday. These are all important questions, and the answers will take time. This transparent reasoning at the time a question is asked of a language model is known as inference-time explainability. Many reasoning steps may be required to connect the current token to the next, making it challenging for the model to learn effectively from next-token prediction.

A particularly compelling aspect of DeepSeek R1 is its apparent transparency in reasoning when responding to complex queries. Scalability: The paper focuses on relatively small-scale mathematical problems, and it is unclear how the system would scale to larger, more complex theorems or proofs. For academia, the availability of more robust open-weight models is a boon because it allows for reproducibility and privacy, and enables the study of the internals of advanced AI. With the models freely available for modification and deployment, the idea that model developers can and will effectively address the risks posed by their models could become increasingly unrealistic. But, regardless, the release of DeepSeek highlights the risks and rewards of this technology’s outsized ability to influence our experience of reality in particular - what we even come to think of as reality. I think a lot of it just stems from education: working with the research community to make sure they're aware of the risks, and to make sure that research integrity is treated as really important. DeepSeek has been publicly releasing open models and detailed technical research papers for over a year. The practice of sharing innovations through technical reports and open-source code continues the tradition of open research that has been essential to driving computing forward for the past 40 years.

He also doubled down on AI, setting up a separate company, Hangzhou High-Flyer AI, to research AI algorithms and their applications, and expanded High-Flyer overseas, establishing a fund registered in Hong Kong. As a research field, we should welcome this kind of work. It will help make everyone’s work better. The funding will help the company further develop its chips as well as the associated software stack. "If we're to counter America’s AI tech dominance, DeepSeek will definitely be a key member of China’s ‘Avengers team,’" he said in a video on Weibo. The strongest behavioral indication that China might be insincere comes from China’s April 2018 United Nations position paper,23 in which China’s government supported a worldwide ban on "lethal autonomous weapons" but used such a bizarrely narrow definition of lethal autonomous weapons that such a ban would appear to be both unnecessary and ineffective. The Chinese government has strategically encouraged open-source development while maintaining tight control over AI’s domestic applications, particularly in surveillance and censorship. While many U.S. companies have leaned toward proprietary models, and questions remain, particularly around data privacy and security, DeepSeek’s open approach encourages broader engagement that benefits the global AI community, fostering iteration, progress, and innovation.

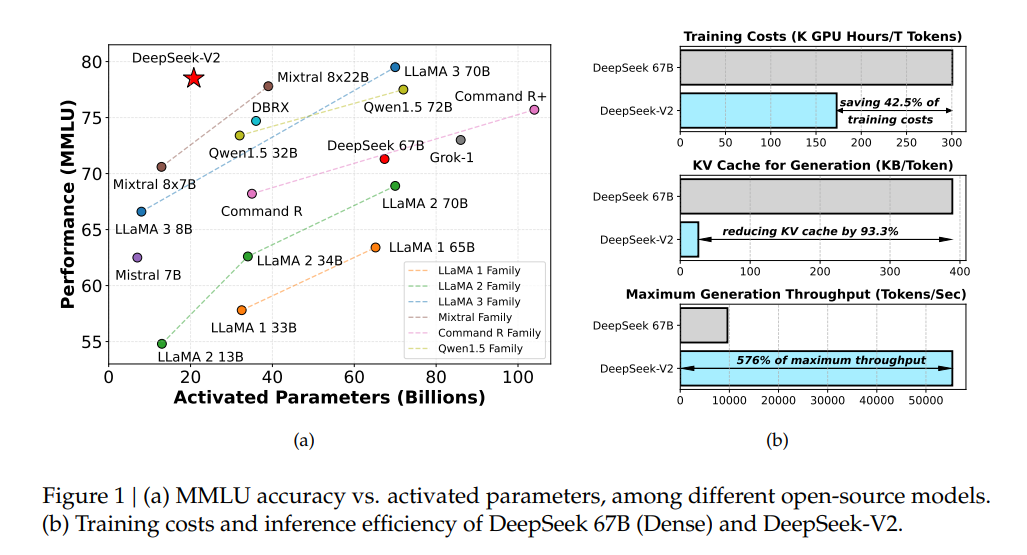

Some companies create these models, while others use them for specific purposes. It’s a sad state of affairs for what has long been an open country advancing open science and engineering that the best way to learn about the details of modern LLM design and engineering is currently to read the thorough technical reports of Chinese companies. Additionally, health insurance companies often tailor insurance plans based on patients’ needs and risks, not just their ability to pay. Major tech players are projected to invest more than $1 trillion in AI infrastructure by 2029, and the DeepSeek development probably won’t change their plans all that much. They're bringing the costs of AI down. DeepSeek has shown many useful optimizations that reduce the costs in terms of computation on each of these sides of the AI sustainability equation. Stanford has currently adapted, through Microsoft’s Azure program, a "safer" version of DeepSeek with which to experiment, and warns the community not to use the commercial versions because of safety and security concerns.
