Jon Turow, an investor at Madrona Ventures in Seattle, says Meta’s pivot from trying to limit distribution of the first Llama model to open-sourcing the second could enable a new wave of creativity using large language models. Thanks to the open-source AI community, we now have many LLMs (large language models) that are publicly available. The error message Permission denied (publickey) indicates that your SSH key is not correctly set up with GitHub, or that you do not have access to the repository. Please make sure you have the correct access rights and that the repository exists. Generative AI works by using existing content as a reference point and learning from it to make its own creations. We are also planning to make it easier for people to flag bad answers, creating issues that are sent to the team to investigate. These tools are tailored to specific user needs. User Testimonials: Positive feedback from users highlights the effectiveness of these tools. When using these tools alongside ChatGPT, you can use ChatGPT for generating ideas, explaining concepts, or validating architectural decisions, while using the diagramming tools to visually represent AWS architectures based on the insights provided by ChatGPT.

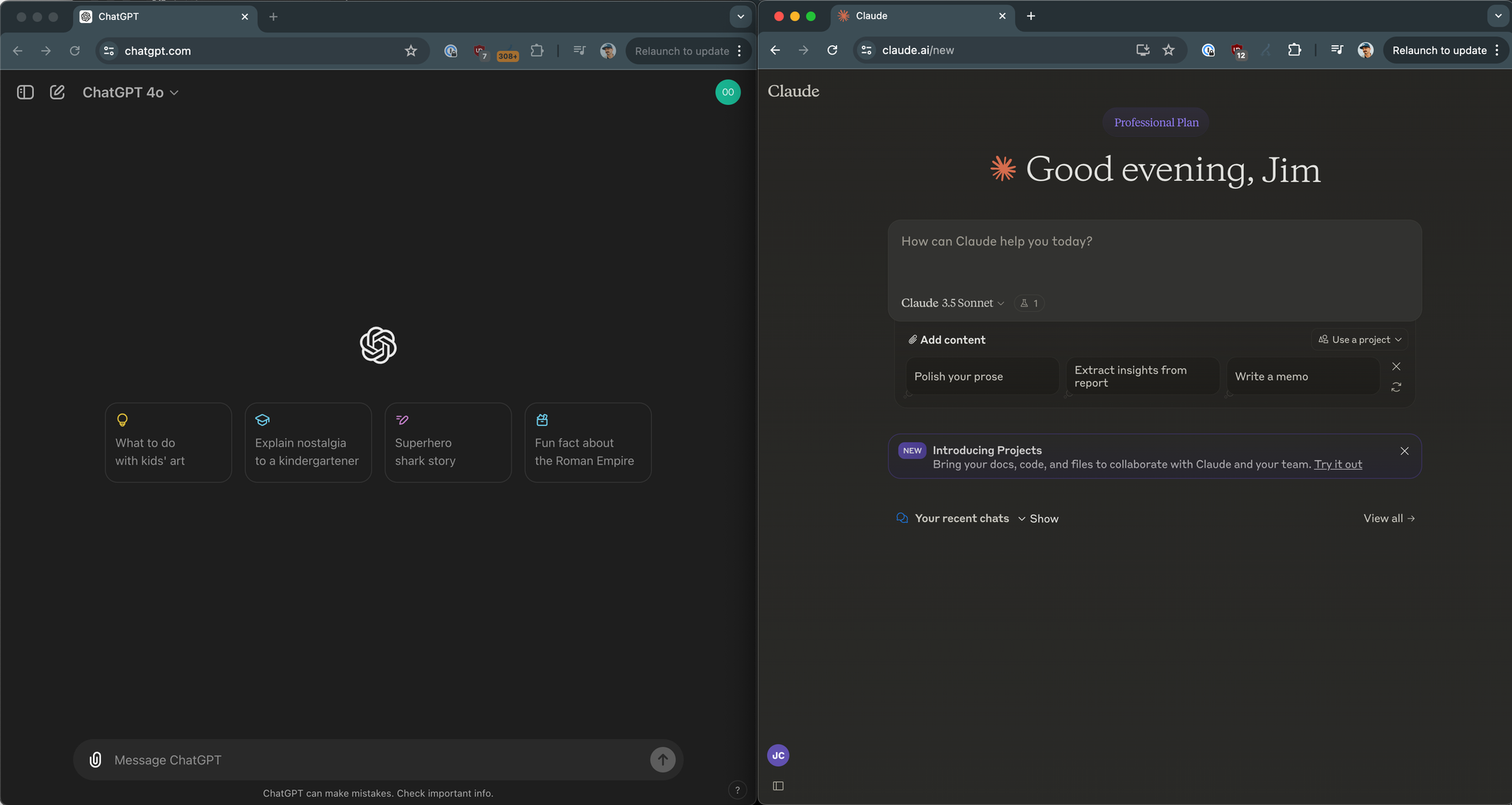

Ollama offers a friendly CLI chat, while LM Studio offers a polished GUI experience. As you can see, ChatGPT provides introductory text and code blocks (with a copy icon for convenience), and concludes the conversation with additional useful information. Code smarter by centralizing your resources. 1. Download and cache the model on first use so it works completely offline after that. 1. Start a Chat: Begin a new conversation with a model like gpt-4o-mini (tested with this and Claude; likely works with others that support function calls). You're asking ChatGPT to help you write some SQL, but ideally you'd like to run it against a real database to know whether it is correct. You can resolve this by using sudo to run the command with elevated privileges. This should resolve the issue, allowing you to update the CocoaPods dependencies and build the Signal-iOS project without any problems. 5. Navigate to the Signal-iOS project directory and try running pod update again. After installing the plugin, navigate to the Signal-iOS project directory and try running pod update again.
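
The "download and cache the model on first use" step mentioned above can be sketched roughly as follows. This is a minimal illustration, not the method of any particular library: `Downloader`, `modelCache`, and `getModel` are hypothetical names, and the cache here lives only in memory. A real browser app would most likely persist the weights in something like the Cache API or IndexedDB so they survive a page reload.

```typescript
// Hypothetical loader type: fetches the model weights for a given id.
type Downloader = (modelId: string) => Promise<Uint8Array>;

// In-memory cache of in-flight or completed downloads, keyed by model id.
// Assumption: a real app would back this with persistent browser storage.
const modelCache = new Map<string, Promise<Uint8Array>>();

function getModel(modelId: string, download: Downloader): Promise<Uint8Array> {
  const cached = modelCache.get(modelId);
  if (cached !== undefined) {
    // Already downloaded (or downloading): reuse it, no network needed.
    return cached;
  }
  const pending = download(modelId);
  modelCache.set(modelId, pending);
  // Forget failed downloads so a later call can retry.
  pending.catch(() => {
    if (modelCache.get(modelId) === pending) {
      modelCache.delete(modelId);
    }
  });
  return pending;
}
```

Caching the promise rather than the resolved bytes means two callers that ask for the same model at the same time share a single download.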

The completion API returns stringified JSON objects that must be parsed before we can access the tokens. So, I made this decision because I need a break from work; in the meantime I will also study DSA and other topics. I believe Google will credit websites for their contributions in its final iterations when it launches Bard worldwide. When will the free version of ChatGPT 4 be available? The chat output is currently shown as plain text. We set up chat templating. Let's set up your own local instance. Let’s try setting up a local Ruby environment using RVM and installing CocoaPods and the plugin in that environment. It could be an issue with the Ruby environment or the gem path. I feel pretty comfortable with Ruby now. 3. Building from the Ground Up. Now, let's demystify what is really going on by building our own implementation from scratch. What makes this approach special is that we're running LLM models directly in your browser, with no backend required. But today, we're exploring something cool: running these models directly in your browser. Imagine running a ChatGPT-like AI right here in your browser, completely offline. If you are not already running the web app, start it using npm run dev and visit http://localhost:5173/.
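
To make the point about stringified JSON concrete, here is a small sketch of the parsing step. The chunk shape (`token`, `done`) and the function name `parseChunks` are assumptions made for illustration; the text does not specify the actual completion API's fields, so adjust them to whatever your server returns.

```typescript
// Hypothetical chunk shape; a real completion API may use different fields.
interface CompletionChunk {
  token?: string;
  done?: boolean;
}

// Turn raw stringified JSON chunks into a list of tokens.
function parseChunks(rawChunks: string[]): string[] {
  const tokens: string[] = [];
  for (const raw of rawChunks) {
    const trimmed = raw.trim();
    if (trimmed === "") {
      continue; // skip blank keep-alive lines
    }
    let parsed: unknown;
    try {
      parsed = JSON.parse(trimmed);
    } catch (_err) {
      continue; // skip chunks that are not valid JSON
    }
    if (typeof parsed === "object" && parsed !== null) {
      const chunk = parsed as CompletionChunk;
      if (typeof chunk.token === "string") {
        tokens.push(chunk.token);
      }
    }
  }
  return tokens;
}
```

Joining the result with `parseChunks(chunks).join("")` gives the text to display. Malformed chunks are skipped here for simplicity; a production client might buffer partial chunks instead.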
