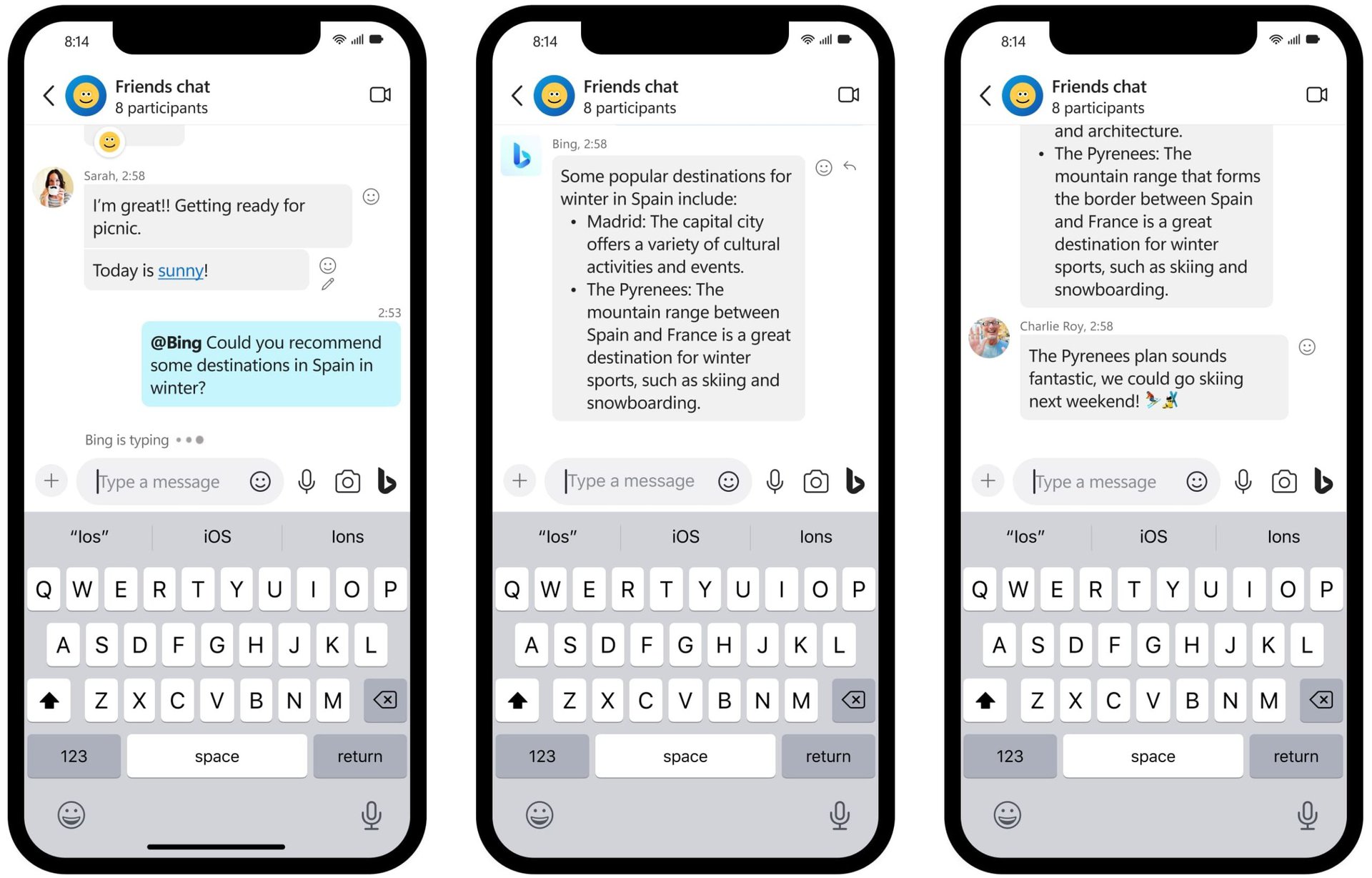

In this case, the resource is a chatbot. Viewers are restricted from performing the write action, which means they cannot submit prompts to the chatbot. Entertainment and Games: ACT LIKE prompts can be used in chat-based video games or virtual assistants to offer interactive experiences in which users interact with virtual characters. This helps streamline cost efficiency, data protection, and dynamic real-time access management, ensuring that your security policies can adapt to evolving business needs. This node is responsible for performing a permission check using Permit.io’s ABAC policies before executing the LLM query. ABAC resource sets allow dynamic control over resource access based on attributes like length, query type, or quota. With Permit.io’s Attribute-Based Access Control (ABAC) policies, you can build detailed rules that control who can use which models or run certain queries, based on dynamic user attributes like token usage or subscription level. One of the standout features of Permit.io’s ABAC implementation is its ability to work with both cloud-based and local Policy Decision Points (PDPs).
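As a rough illustration of running a permission check before the LLM query, here is a minimal sketch. It does not use Permit.io's SDK: `ROLE_ACTIONS`, `is_allowed`, `guarded_query`, and `echo_llm` are hypothetical names, the `editor` role is an assumption, and the in-memory table stands in for a real Policy Decision Point.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical role-to-action table; a real deployment would ask a PDP instead.
ROLE_ACTIONS = {
    "viewer": {"read"},           # viewers cannot perform the write action
    "editor": {"read", "write"},  # assumed role that may submit prompts
}


@dataclass
class User:
    key: str
    role: str


def is_allowed(user: User, action: str, resource: str) -> bool:
    """Local stand-in for a policy decision on (user, action, resource)."""
    if resource != "chatbot":
        return False
    return action in ROLE_ACTIONS.get(user.role, set())


def guarded_query(user: User, prompt: str, llm: Callable[[str], str]) -> str:
    """Run the permission check first; call the LLM only if it passes."""
    if not is_allowed(user, "write", "chatbot"):
        raise PermissionError(f"{user.key} may not submit prompts to the chatbot")
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Placeholder for the real model call."""
    return f"echo: {prompt}"
```

Calling `guarded_query(User("bob", "viewer"), "hi", echo_llm)` raises `PermissionError`, while the same call with an `editor` reaches the model. Keeping the check in its own function makes it easy to swap the table for a call to a real policy engine later.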

It helps you become more innovative and adaptable so that your AI interactions work better for you. Now you are ready to put this knowledge to work. After testing the application, I was able to deploy it. Tonight was a great example: I decided I would try to build a Wish List web application, since it's coming up to Christmas and it was top of mind. I've tried to imagine what it would look like if non-developers were able to build entire web applications without understanding web technologies, and I come up with so many reasons why it would not work, even if future iterations of GPT do not hallucinate as much. Therefore, no matter whether you want to convert MBR to GPT or GPT to MBR in Windows 11/10/8/7, it can ensure a successful conversion by keeping all partitions safe on the target disk. With this setup, you get a robust, reusable permission system embedded right into your AI workflows, keeping things secure, efficient, and scalable.

Frequently I want to get feedback, input, or ideas from the audience. It is an essential skill for developers and anyone working with AI to get the results they want. This gives developers more control over deployment while supporting ABAC, so that complex permissions can be enforced. Developers must handle various PDF text extraction challenges, such as AES encryption, watermarks, or slow processing times, to ensure a smooth user experience. The legal world needs to treat AI training more like the photocopier, and less like an actual human. This would allow me to replace the useless IDs with the more useful titles on PDFs whenever I take notes on them. A streaming-based implementation is a bit more involved. You can change the code or the chain implementation to add more security or permission checks, giving your LLM stronger security and authentication. Note: It is best to install Langflow and the other required libraries in a dedicated Python virtual environment (you can create one using venv or conda). For example, a "Premium" subscriber can be allowed to run queries while a "Free" subscriber is limited, or access can depend on whether a user exists in the system at all.

Premium users can run LLM queries without limits. This component ensures that only authorized users can execute certain actions, such as sending prompts to the LLM, based on their roles and attributes. Query token above 50 Characters: A resource set for users who have permission to submit prompts longer than 50 characters. The custom component ensures that only authorized users with the correct attributes can proceed to query the LLM. Once the permissions are validated, the next node in the chain is the OpenAI node, which is configured to query an LLM through OpenAI’s API. Integrate with a database or API. Description: Free, simple, and intuitive online database diagram editor and SQL generator. Hint 10: Always use AI for generating database queries and schemas. When it transitions from generating truth to generating nonsense, it does not give a warning that it has done so (and any truth it does generate is, in a sense, at least partially accidental). It’s also useful for generating blog posts based on form submissions with user ideas.
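The attribute-based rule above can be sketched in a few lines. This is only an illustration under stated assumptions: the `tier` attribute, the `long_prompt` and `short_prompt` set names, and the rule that free users are limited to short prompts are all hypothetical, and a real setup would define these as ABAC resource sets in the policy engine rather than in application code.

```python
from dataclasses import dataclass

# Threshold from the "above 50 characters" resource set described in the text.
LONG_PROMPT_THRESHOLD = 50


@dataclass
class Subscriber:
    key: str
    tier: str  # assumed attribute: "premium" or "free"


def resource_set(prompt: str) -> str:
    """Classify a prompt into a resource set by its length attribute."""
    if len(prompt) > LONG_PROMPT_THRESHOLD:
        return "long_prompt"
    return "short_prompt"


def can_submit(user: Subscriber, prompt: str) -> bool:
    """Premium users are unrestricted; others may only submit short prompts."""
    if user.tier == "premium":
        return True
    return resource_set(prompt) == "short_prompt"
```

Because the decision depends on attributes of both the user (`tier`) and the resource (prompt length), changing the threshold or adding a new tier only touches the policy functions, not the code that calls the LLM.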
