A key insight from the paper is the self-evolution process of the model, illustrated in the figure above. The biggest buzz is around Janus Pro 7B, the heavyweight of the new models, which DeepSeek says beats OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion XL on key performance tests. DeepSeek offers greater flexibility for tailored solutions thanks to its open-source framework, making it preferable for users who need specific adaptations. To run reinforcement learning at a large scale, instead of using standard reinforcement learning from human or AI feedback, a rule-based reinforcement learning method is employed. This works specifically in tasks such as coding, math, science and logic reasoning, where clear solutions can define reward rules for the reinforcement learning process. Gathering large-scale, high-quality human feedback, especially for complex tasks, is challenging. Incorporating a supervised fine-tuning phase on a small, high-quality dataset helps DeepSeek-R1 mitigate the readability issues observed in the initial model. These results were validated as high-quality and readable.
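To make the idea of a rule-based reward concrete, here is a minimal sketch of an accuracy rule for math-style tasks. It assumes the model is asked to put its final answer inside `\boxed{...}` and that correctness is judged by exact string match against a reference; both conventions, and the function names `extract_final_answer` and `accuracy_reward`, are illustrative assumptions rather than the paper's actual implementation.

```python
import re
from typing import Optional

# Assumption: the final answer is wrapped in \boxed{...} with no nested braces.
_BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the response, or None."""
    matches = _BOXED.findall(response)
    return matches[-1].strip() if matches else None


def accuracy_reward(response: str, reference: str) -> float:
    """Hypothetical rule: 1.0 if the extracted answer equals the reference, else 0.0."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0  # no parsable answer, so no reward
    return 1.0 if answer == reference.strip() else 0.0
```

Because the rule is a deterministic check, it needs neither human labels nor a separate reward model, which is what makes it cheap to run at scale. A real system would likely need a more forgiving comparison (for example, treating `0.5` and `1/2` as equal).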

DeepSeek-R1 achieves results on par with OpenAI's o1 model on several benchmarks, including MATH-500 and SWE-bench. The Verge stated "It's technologically impressive, even if the results sound like mushy versions of songs that might feel familiar", while Business Insider stated "surprisingly, some of the resulting songs are catchy and sound legitimate". In the figure, the x-axis shows the number of training steps and the y-axis the response length: as training progresses, the model’s responses grow longer. Interestingly, an ablation study shows that guiding the model to be consistent with one language slightly damages its performance. Because human feedback is hard to gather at scale, another common approach is Reinforcement Learning from AI Feedback (RLAIF), where an AI model provides the feedback. For RLAIF to work effectively, a highly capable model is needed to provide accurate feedback. Diverse Reinforcement Learning Phase (Phase 4): This final phase includes diverse tasks. Google's BERT, for instance, is an open-source model widely used for tasks like entity recognition and language translation, establishing itself as a versatile tool in NLP. Let’s now explore a few performance insights of the DeepSeek-R1-Zero model.

In the table above from the paper, we see a comparison of DeepSeek-R1-Zero and OpenAI’s o1 on reasoning-related benchmarks. If that was not enough, there’s another intriguing phenomenon, referred to in the paper as the ‘Aha moment’ of DeepSeek-R1-Zero. The example below from the paper demonstrates this phenomenon. The world’s best open-weight model might now be Chinese: that’s the takeaway from a recent Tencent paper that introduces Hunyuan-Large, a MoE model with 389 billion parameters (52 billion activated). The paper we’re reviewing today eliminates, or partially eliminates, the supervised fine-tuning stage. For DeepSeek-R1-Zero, the supervised fine-tuning stage is completely omitted. Rejection Sampling and Supervised Fine-Tuning (Phase 3): In this phase, the model checkpoint from phase 2 is used to generate many samples. Supervised Fine-tuning: In this stage, the model is fine-tuned on an instruction dataset. Additionally, various smaller open-source models were distilled using the dataset constructed in phase 3, offering smaller alternatives with high reasoning capabilities. DeepSeek-Coder-V2: Released in July 2024, this is a 236 billion-parameter model offering a context window of 128,000 tokens, designed for complex coding challenges. Through reinforcement learning, the model naturally learns to allocate more thinking time when solving reasoning tasks.
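The rejection sampling step in phase 3 can be sketched roughly as follows: draw several candidate responses per prompt and keep only those that pass a filter. The `generate` and `accept` callables, the sample count, and the dataset layout are all hypothetical placeholders here; the text above does not specify how the checkpoint is queried or how samples are judged.

```python
from typing import Callable, Dict, Iterable, List


def rejection_sample(
    prompt: str,
    generate: Callable[[str], str],      # hypothetical: samples one response from the checkpoint
    accept: Callable[[str, str], bool],  # hypothetical: True if the response is correct and readable
    num_samples: int = 8,
) -> List[str]:
    """Draw num_samples candidates for a prompt and keep only the accepted ones."""
    kept = []
    for _ in range(num_samples):
        candidate = generate(prompt)
        if accept(prompt, candidate):
            kept.append(candidate)
    return kept


def build_sft_dataset(
    prompts: Iterable[str],
    generate: Callable[[str], str],
    accept: Callable[[str, str], bool],
    num_samples: int = 8,
) -> List[Dict[str, str]]:
    """Collect accepted (prompt, response) pairs for a later supervised fine-tuning run."""
    dataset = []
    for prompt in prompts:
        for response in rejection_sample(prompt, generate, accept, num_samples):
            dataset.append({"prompt": prompt, "response": response})
    return dataset
```

The design point is that the filter, not the generator, controls dataset quality: a prompt for which no candidate passes simply contributes nothing.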

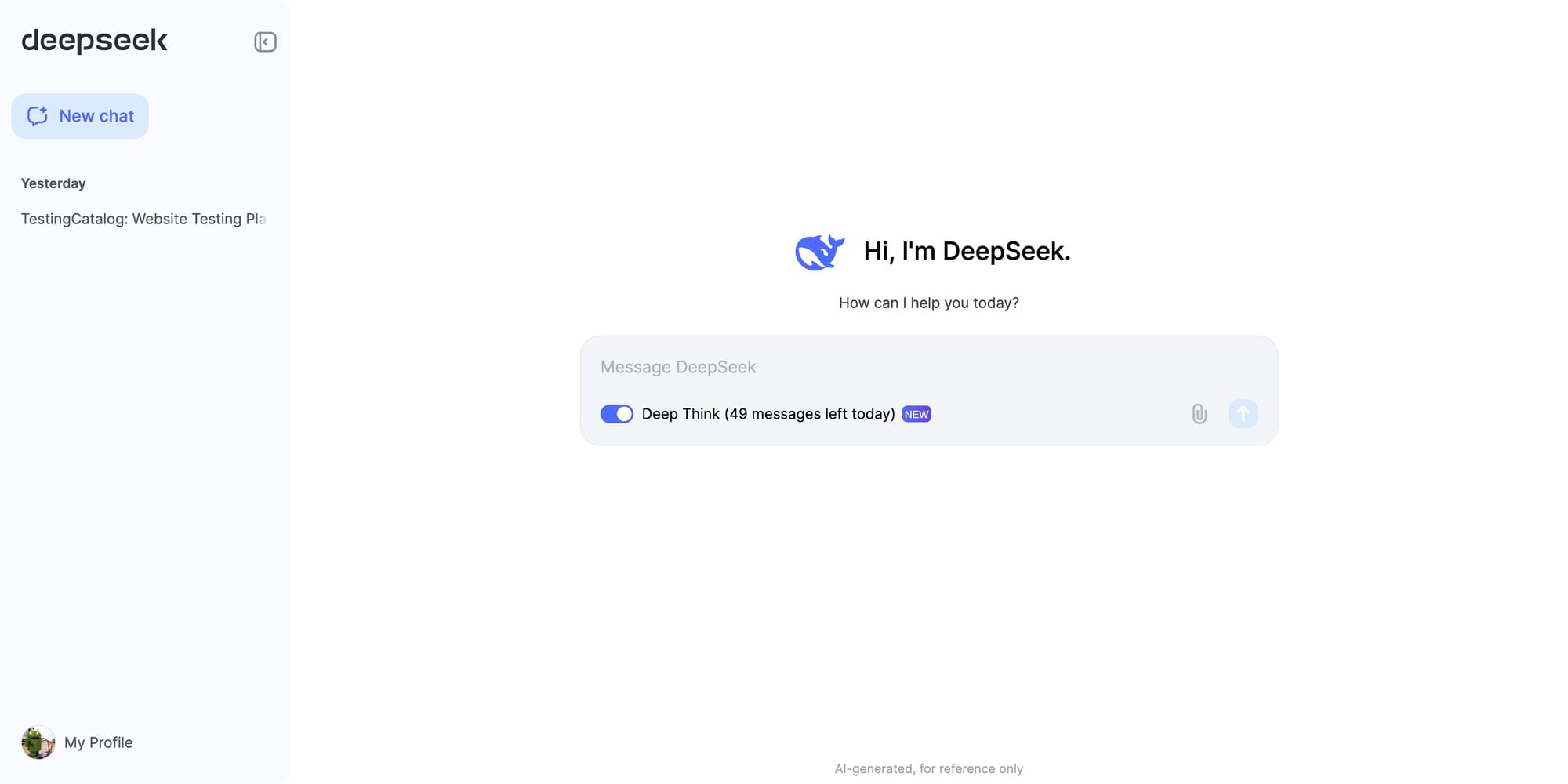

The model learns to reevaluate its initial approach and correct itself if needed. Notably, the average pass@1 score on AIME significantly increases, jumping from an initial 15.6% to an impressive 71.0%, reaching levels comparable to OpenAI’s o1! This suggests humans may have some advantage at initial calibration of AI systems, but the AI systems can probably naively optimize themselves better than a human, given a long enough amount of time. Once you’re done experimenting, you can register the selected model in the AI Console, which is the hub for all your model deployments. In the figure below from the paper, we can see how the model is instructed to respond, with its reasoning process inside `<think>` tags and the answer inside `<answer>` tags. And although there are limitations to this (LLMs still won't be able to think beyond their training data), it’s of course hugely valuable and means we can actually use them for real-world tasks.
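A response template like the one described above lends itself to a simple rule-based format check. The sketch below assumes the tags are `<think>` and `<answer>`, in that order, and that nothing but whitespace surrounds them; the function names and the 0/1 reward values are illustrative assumptions, not the paper's implementation.

```python
import re
from typing import Optional, Tuple

# Assumed template: <think>...</think> followed by <answer>...</answer>.
_TEMPLATE = re.compile(
    r"\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*",
    re.DOTALL,  # let the reasoning and the answer span multiple lines
)


def split_response(response: str) -> Optional[Tuple[str, str]]:
    """Return (reasoning, answer) if the whole response follows the template, else None."""
    match = _TEMPLATE.fullmatch(response)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


def format_reward(response: str) -> float:
    """Hypothetical rule: 1.0 when the response follows the template, 0.0 otherwise."""
    return 1.0 if split_response(response) is not None else 0.0
```

For example, `split_response("<think>2+2=4</think>\n<answer>4</answer>")` yields the reasoning and the answer as separate strings, so the answer part can then be scored on its own.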
