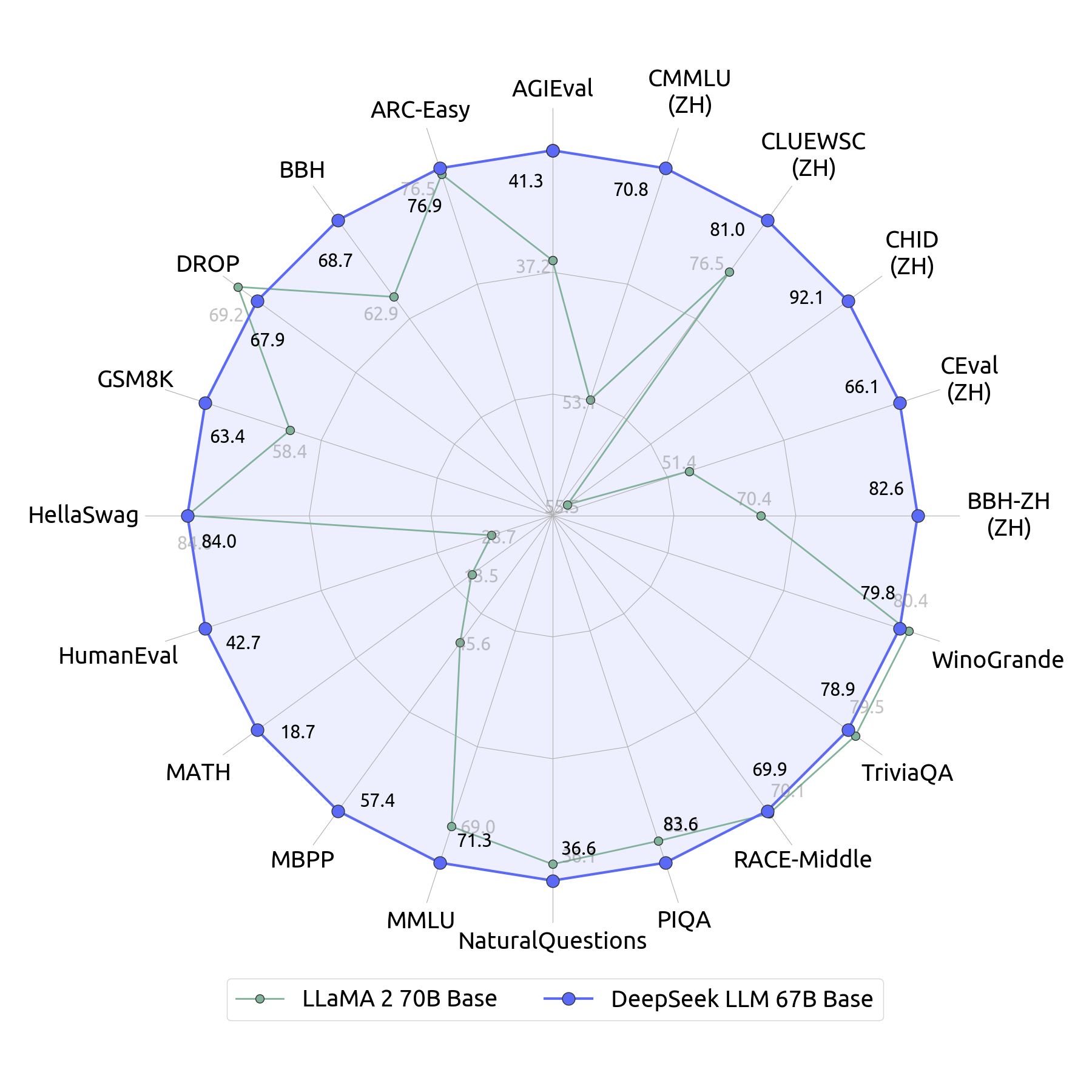

Compared with DeepSeek 67B, DeepSeek-V2 achieves significantly stronger performance, and in the meantime saves 42.5% of training costs, reduces the KV cache by 93.3%, and boosts the maximum technology throughput to 5.76 instances. One factor to take into consideration because the strategy to building high quality training to teach people Chapel is that in the intervening time the very best code generator for different programming languages is Deepseek Coder 2.1 which is freely available to make use of by individuals. That’s far harder - and with distributed coaching, these people could train fashions as properly. By far probably the most interesting detail although is how much the coaching cost. The 7B mannequin's training involved a batch measurement of 2304 and a studying rate of 4.2e-4 and the 67B mannequin was educated with a batch measurement of 4608 and a learning rate of 3.2e-4. We make use of a multi-step learning rate schedule in our coaching process. We instantly apply reinforcement studying (RL) to the bottom model without relying on supervised wonderful-tuning (SFT) as a preliminary step. Distilled models have been skilled by SFT on 800K knowledge synthesized from DeepSeek-R1, in an identical means as step 3 above. Step 1: Install WasmEdge by way of the following command line.

Then, use the following command strains to start out an API server for the model. From another terminal, you'll be able to work together with the API server utilizing curl. You can even interact with the API server using curl from one other terminal . Generate and Pray: Using SALLMS to guage the safety of LLM Generated Code. The research neighborhood is granted entry to the open-supply variations, DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat. DeepSeek LLM 7B/67B models, together with base and chat versions, are launched to the public on GitHub, Hugging Face and likewise AWS S3. The DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat versions have been made open source, aiming to assist research efforts in the sector. DeepSeek focuses on growing open supply LLMs. I’ll be sharing more soon on the right way to interpret the stability of power in open weight language fashions between the U.S. The low-price growth threatens the enterprise mannequin of U.S. The export of the highest-performance AI accelerator and GPU chips from the U.S. Additionally it is a cross-platform portable Wasm app that may run on many CPU and GPU gadgets. Emergent behavior network. DeepSeek's emergent habits innovation is the discovery that complicated reasoning patterns can develop naturally by means of reinforcement learning without explicitly programming them.

Then, use the following command strains to start out an API server for the model. From another terminal, you'll be able to work together with the API server utilizing curl. You can even interact with the API server using curl from one other terminal . Generate and Pray: Using SALLMS to guage the safety of LLM Generated Code. The research neighborhood is granted entry to the open-supply variations, DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat. DeepSeek LLM 7B/67B models, together with base and chat versions, are launched to the public on GitHub, Hugging Face and likewise AWS S3. The DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat versions have been made open source, aiming to assist research efforts in the sector. DeepSeek focuses on growing open supply LLMs. I’ll be sharing more soon on the right way to interpret the stability of power in open weight language fashions between the U.S. The low-price growth threatens the enterprise mannequin of U.S. The export of the highest-performance AI accelerator and GPU chips from the U.S. Additionally it is a cross-platform portable Wasm app that may run on many CPU and GPU gadgets. Emergent behavior network. DeepSeek's emergent habits innovation is the discovery that complicated reasoning patterns can develop naturally by means of reinforcement learning without explicitly programming them.

Reward engineering is the technique of designing the incentive system that guides an AI mannequin's learning throughout training. That is, Tesla has bigger compute, a larger AI staff, testing infrastructure, access to nearly unlimited training knowledge, and the ability to provide millions of function-built robotaxis in a short time and cheaply. DeepSeek-V2. Released in May 2024, this is the second model of the company's LLM, specializing in strong performance and lower coaching costs. DeepSeek LLM. Released in December 2023, this is the first model of the company's basic-objective model. That’s all. WasmEdge is best, quickest, and safest option to run LLM purposes. AI startup Prime Intellect has trained and released INTELLECT-1, a 1B mannequin educated in a decentralized means. I just lately had the chance to make use of DeepSeek, and I have to say, it has utterly transformed the way I method information analysis and resolution-making. DeepSeek-LLM-7B-Chat is a sophisticated language model trained by DeepSeek, a subsidiary firm of High-flyer quant, comprising 7 billion parameters. DeepSeek, being a Chinese company, is subject to benchmarking by China’s internet regulator to make sure its models’ responses "embody core socialist values." Many Chinese AI programs decline to respond to subjects which may elevate the ire of regulators, like hypothesis concerning the Xi Jinping regime.

These present fashions, while don’t really get things correct always, do provide a pretty handy software and in conditions the place new territory / new apps are being made, I think they could make significant progress. DeepSeek Coder fashions are trained with a 16,000 token window size and an additional fill-in-the-clean activity to enable undertaking-stage code completion and infilling. DeepSeek-Coder-6.7B is amongst DeepSeek Coder sequence of giant code language fashions, pre-educated on 2 trillion tokens of 87% code and 13% natural language text. Therefore, though this code was human-written, it would be much less stunning to the LLM, therefore decreasing the Binoculars rating and reducing classification accuracy. All reward capabilities had been rule-based mostly, "mainly" of two sorts (other varieties were not specified): accuracy rewards and format rewards. This knowledge includes useful and impartial human directions, structured by the Alpaca Instruction format. It includes 236B total parameters, of which 21B are activated for every token, and supports a context size of 128K tokens.