Why is DeepSeek such a big deal? It is a big deal because it suggests that if you want to control AI systems, you need to control not only the basic resources (e.g., compute, electricity) but also the platforms the systems are served on (e.g., proprietary websites), so that you don't leak the really valuable stuff: samples including chains of thought from reasoning models. The Know Your AI system in your classifier assigns a high degree of confidence to the likelihood that your system was attempting to bootstrap itself beyond the ability of other AI systems to monitor it. DeepSeek-Prover-V1.5 is a system that combines reinforcement learning and Monte-Carlo Tree Search to harness feedback from proof assistants for improved theorem proving. The paper presents the technical details of this system and evaluates its performance on challenging mathematical problems. This is a Plain English Papers summary of a research paper called DeepSeek-Prover advances theorem proving through reinforcement learning and Monte-Carlo Tree Search with proof assistant feedback. The key contributions of the paper include a novel approach to leveraging proof assistant feedback, along with advancements in reinforcement learning and search algorithms for theorem proving. DeepSeek-Prover-V1.5 combines two powerful techniques to this end: reinforcement learning and Monte-Carlo Tree Search.

I built a serverless application using Cloudflare Workers and Hono, a lightweight web framework for Cloudflare Workers. The application demonstrates multiple AI models from Cloudflare's AI platform: it generates steps for inserting random data into a PostgreSQL database and then converts those steps into SQL queries. Exploring AI Models: I explored Cloudflare's AI models to find one that could generate natural language instructions based on a given schema. Initializing AI Models: The application creates instances of two AI models. The first, @hf/thebloke/deepseek-coder-6.7b-base-awq, understands natural language instructions and generates the steps in human-readable format. The second, @cf/defog/sqlcoder-7b-2, receives the generated steps and the schema definition and translates them into the corresponding SQL code. Integration and Orchestration: I implemented the logic to process the generated instructions and convert them into SQL queries. API Endpoint: The application exposes an API endpoint (/generate-information) that accepts a schema and returns the generated steps and SQL queries.
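The two-model flow described above can be sketched roughly as follows. This is a minimal illustration, not the author's code: the `AiRunner` interface is a hypothetical stand-in for the Workers AI binding, the prompt wording is invented for the example, and the `{ response }` result shape is an assumption. Only the two model names come from the text.

```typescript
// Hypothetical stand-in for the Workers AI binding (assumption, not the real type).
interface AiRunner {
  run(model: string, inputs: { prompt: string }): Promise<{ response?: string }>;
}

// Model names as given in the text.
const STEPS_MODEL = "@hf/thebloke/deepseek-coder-6.7b-base-awq";
const SQL_MODEL = "@cf/defog/sqlcoder-7b-2";

// Prompt for the first model: ask for human-readable insertion steps.
// The wording is illustrative only.
function buildStepsPrompt(schema: string): string {
  return `Given this PostgreSQL schema, list the steps to insert random data:\n${schema}`;
}

// Prompt for the second model: combine the generated steps with the schema.
function buildSqlPrompt(schema: string, steps: string): string {
  return `Schema:\n${schema}\n\nSteps:\n${steps}\n\nWrite the SQL for these steps.`;
}

// Orchestration: model 1 produces steps, model 2 turns steps + schema into SQL.
async function generateData(
  ai: AiRunner,
  schema: string
): Promise<{ steps: string; sql: string }> {
  const stepsResult = await ai.run(STEPS_MODEL, { prompt: buildStepsPrompt(schema) });
  const steps = stepsResult.response ?? "";
  const sqlResult = await ai.run(SQL_MODEL, { prompt: buildSqlPrompt(schema, steps) });
  return { steps, sql: sqlResult.response ?? "" };
}
```

In a Worker, a route handler would presumably pass the platform's AI binding as `ai` and return the resulting object as JSON. Keeping the prompt builders as pure functions makes them easy to test without calling any model.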

The generated SQL scripts must be functional and adhere to the DDL and data constraints. These cut-downs also cannot be end-use checked and could potentially be reversed, like Nvidia's former crypto-mining limiters, if the hardware isn't fused off. And because more people use you, you get more data. Get the dataset and code here (BioPlanner, GitHub). The founders of Anthropic used to work at OpenAI and, if you look at Claude, Claude is definitely at GPT-3.5 level as far as performance, but they couldn't get to GPT-4. Nothing specific, I rarely work with SQL these days. Returning Data: The function returns a JSON response containing the generated steps and the corresponding SQL code. This is achieved by leveraging Cloudflare's AI models to understand and generate natural language instructions, which are then converted into SQL commands. If you want any custom settings, set them and then click Save settings for this model followed by Reload the Model in the top right.

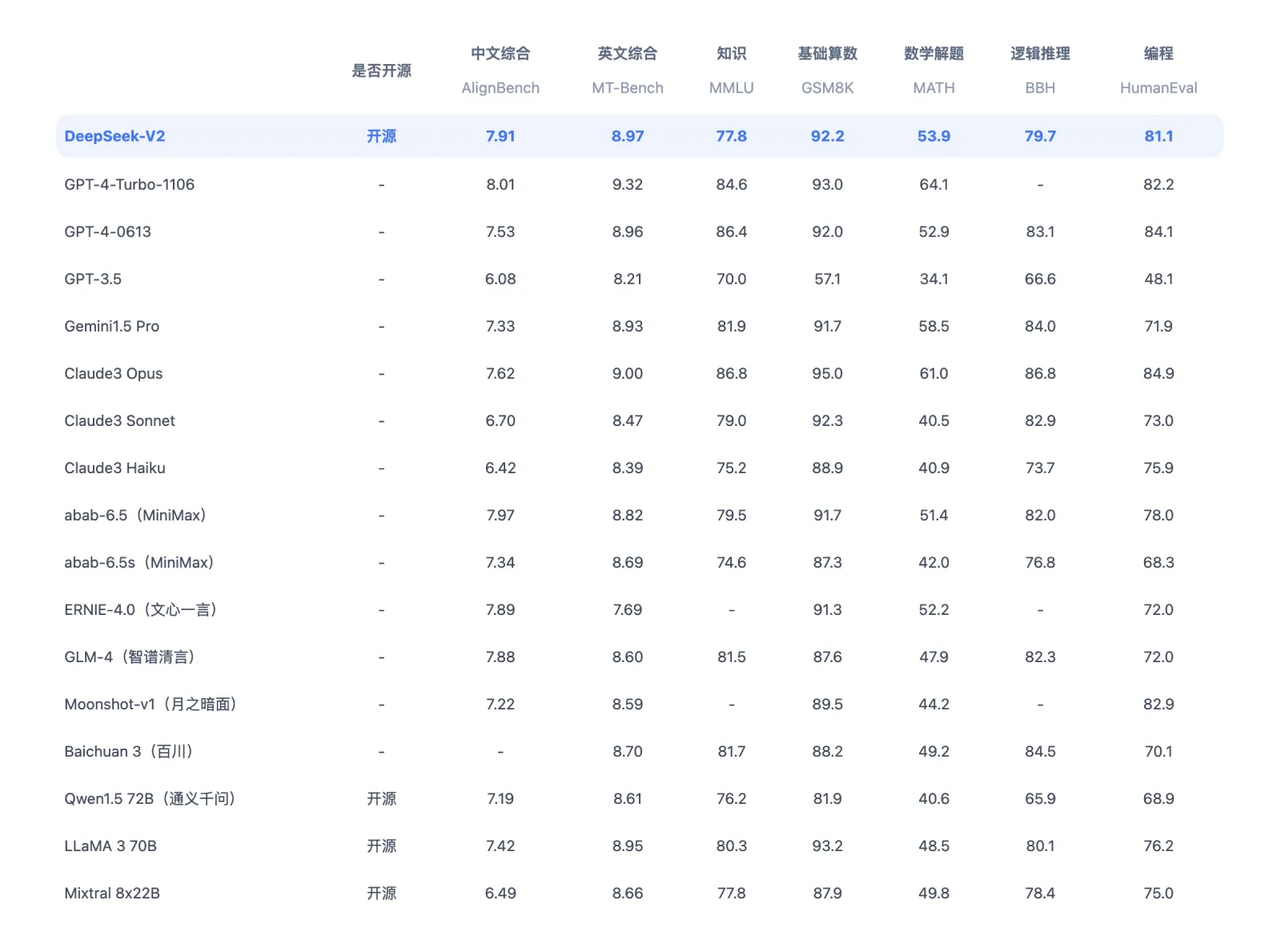

372) - and, as is traditional in SV, takes some of the ideas, files the serial numbers off, gets tons about it wrong, and then re-represents it as its own. Models are released as sharded safetensors files. This repo contains AWQ model files for DeepSeek's Deepseek Coder 6.7B Instruct. The DeepSeek V2 Chat and DeepSeek Coder V2 models have been merged and upgraded into the new model, DeepSeek V2.5. So you can have different incentives. PanGu-Coder2 can also provide coding assistance, debug code, and suggest optimizations. Step 1: Initially pre-trained with a dataset consisting of 87% code, 10% code-related language (GitHub Markdown and StackExchange), and 3% non-code-related Chinese language. Next, we collect a dataset of human-labeled comparisons between outputs from our models on a larger set of API prompts. Have you ever set up agentic workflows? I'm interested in setting up an agentic workflow with Instructor. I believe Instructor uses the OpenAI SDK, so it should be possible. It uses a closure to multiply the result by every integer from 1 up to n. When using vLLM as a server, pass the --quantization awq parameter. In this regard, if a model's outputs successfully pass all test cases, the model is considered to have successfully solved the problem.
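As a rough illustration of the last two ideas, the sketch below computes a factorial with a closure that multiplies a running result by every integer from 1 up to n, then applies the "passes all test cases" criterion to it. The names `factorial`, `TestCase`, and `passesAllTests`, and the test cases themselves, are hypothetical and chosen only for this example; the original code and benchmark harness are not shown in the text.

```typescript
// Factorial via a closure: `multiply` captures and updates `result`.
function factorial(n: number): number {
  let result = 1;
  const multiply = (i: number): void => {
    result *= i;
  };
  for (let i = 1; i <= n; i++) {
    multiply(i);
  }
  return result;
}

// Hypothetical test-case shape for this example.
interface TestCase {
  input: number;
  expected: number;
}

// A candidate counts as solving the problem only if every test case passes.
function passesAllTests(
  candidate: (n: number) => number,
  cases: TestCase[]
): boolean {
  return cases.every(({ input, expected }) => candidate(input) === expected);
}

const cases: TestCase[] = [
  { input: 0, expected: 1 },
  { input: 1, expected: 1 },
  { input: 5, expected: 120 },
];

// True only when all three cases pass.
const solved = passesAllTests(factorial, cases);
```

A single failing case is enough to mark the problem unsolved under this all-or-nothing criterion, which is why an identity function would be rejected here on the first case.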
