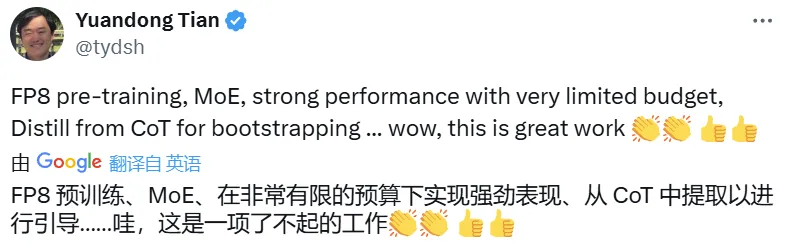

This sounds a lot like what OpenAI did for o1: DeepSeek started the model out with a bunch of examples of chain-of-thought thinking so it could learn the proper format for human consumption, and then did the reinforcement learning to enhance its reasoning, along with a number of editing and refinement steps; the output is a model that appears to be very competitive with o1. Meanwhile, we also maintain control over the output style and length of DeepSeek-V3. The last time the create-react-app package was updated was on April 12, 2022 at 1:33 EDT, which, as of this writing, is over two years ago. Following this, we perform reasoning-oriented RL like DeepSeek-R1-Zero. This approach allows the model to explore chain-of-thought (CoT) for solving complex problems, resulting in the development of DeepSeek-R1-Zero. During this phase, DeepSeek-R1-Zero learns to allocate more thinking time to a problem by reevaluating its initial approach. A particularly intriguing phenomenon observed during the training of DeepSeek-R1-Zero is the occurrence of an "aha moment". The "aha moment" serves as a powerful reminder of the potential of RL to unlock new levels of intelligence in artificial systems, paving the way for more autonomous and adaptive models in the future.

Here again it seems plausible that DeepSeek benefited from distillation, particularly in terms of training R1. How does DeepSeek compare here? The way to interpret both discussions should be grounded in the fact that the DeepSeek V3 model is extremely good on a per-FLOP comparison to peer models (likely even some closed API models, more on this below). It underscores the power and beauty of reinforcement learning: rather than explicitly teaching the model how to solve a problem, we simply provide it with the right incentives, and it autonomously develops advanced problem-solving strategies. That, though, is itself an important takeaway: we have a situation where AI models are teaching AI models, and where AI models are teaching themselves. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead.
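To make "provide it with the right incentives" concrete, here is a minimal sketch of a rule-based reward that scores only the outcome and the output layout, never the reasoning steps themselves. The `<think>`/`<answer>` tag template, the function names, and the `format_weight` value are assumptions for illustration, not DeepSeek's actual implementation.

```python
import re

# Hypothetical output template: reasoning in <think>, final result in <answer>.
THINK_ANSWER = re.compile(
    r"\A\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*\Z", re.DOTALL
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the think-then-answer layout."""
    return 1.0 if THINK_ANSWER.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the extracted answer equals the reference answer."""
    match = THINK_ANSWER.match(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.2) -> float:
    """Combine both signals; the weight is an arbitrary illustrative choice."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)
```

Nothing in this reward says how to reason. An RL loop that maximizes it is free to discover longer or more careful chains of thought on its own, which is the point the paragraph above makes.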

Resurrection logs: They started as an idiosyncratic form of model capability exploration, then became a tradition among most experimentalists, then turned into a de facto convention. R1 is competitive with o1, although there do seem to be some holes in its capability that point towards some amount of distillation from o1-Pro. If we get it wrong, we’re going to be dealing with inequality on steroids - a small caste of people will be getting a vast amount done, aided by ghostly superintelligences that work on their behalf, while a larger set of people watch the success of others and ask ‘why not me?’ Because it will change by nature of the work that they’re doing. Execute the code and let the agent do the work for you. The classic example is AlphaGo, where DeepMind gave the model the rules of Go with the reward function of winning the game, and then let the model figure out everything else on its own.
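The "rules plus a win reward" idea can be illustrated on a far smaller game than Go. The sketch below is not AlphaGo's method. It is plain tabular Q-learning on a toy version of Nim (take 1 to 3 stones, whoever takes the last stone wins), with one value table shared by both players. The game, the function names, and the hyperparameters are all assumptions chosen for illustration.

```python
import random

MAX_TAKE = 3  # a player may remove 1..MAX_TAKE stones per turn


def legal_moves(pile):
    """Moves allowed by the rules: take at least 1 and at most MAX_TAKE stones."""
    return range(1, min(MAX_TAKE, pile) + 1)


def train(max_pile=12, episodes=5000, seed=0):
    """Learn action values from random play, using only the win signal."""
    rng = random.Random(seed)
    q = {(p, a): 0.0 for p in range(1, max_pile + 1) for a in legal_moves(p)}
    for _ in range(episodes):
        pile = rng.randint(1, max_pile)
        while pile > 0:
            action = rng.choice(list(legal_moves(pile)))
            remaining = pile - action
            if remaining == 0:
                target = 1.0  # the only reward: taking the last stone wins
            else:
                # The opponent moves next, so their best outcome is our loss.
                target = -max(q[(remaining, b)] for b in legal_moves(remaining))
            q[(pile, action)] = target  # deterministic game, so step size 1
            pile = remaining
    return q


def best_move(q, pile):
    """Greedy move under the learned values."""
    return max(legal_moves(pile), key=lambda a: q[(pile, a)])
```

No strategy is coded in here. With enough episodes the table should still settle on the known optimal policy for this game, which is to leave the opponent a multiple of four stones.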