It is worth understanding that Tesla is in a better position than the Chinese to take advantage of new techniques like those used by DeepSeek. This approach ensures that the quantization process can better accommodate outliers by adapting the scale to smaller groups of elements. As illustrated in Figure 7 (a), (1) for activations, we group and scale elements on a 1x128 tile basis (i.e., per token per 128 channels); and (2) for weights, we group and scale elements on a 128x128 block basis (i.e., per 128 input channels per 128 output channels). The associated dequantization overhead is largely mitigated by our increased-precision accumulation process, a critical aspect of achieving accurate FP8 General Matrix Multiplication (GEMM). As mentioned earlier, our fine-grained quantization applies per-group scaling factors along the inner dimension K. These scaling factors can be efficiently multiplied on the CUDA Cores as part of the dequantization process with minimal additional computational cost. FP16 uses half the memory of FP32 (2 bytes per value instead of 4), which means the RAM requirements for FP16 models are approximately half of the FP32 requirements. In contrast to the hybrid FP8 format adopted by prior work (NVIDIA, 2024b; Peng et al., 2023b; Sun et al., 2019b), which uses E4M3 (4-bit exponent and 3-bit mantissa) in Fprop and E5M2 (5-bit exponent and 2-bit mantissa) in Dgrad and Wgrad, we adopt the E4M3 format on all tensors for higher precision.
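To make the tile and block grouping concrete, here is a minimal pure-Python sketch under stated assumptions: FP8 E4M3 is emulated in software (rounding to 3 mantissa bits and saturating at ±448), activations are an M×K list of rows, weights are a K×N list, and every function name is hypothetical rather than part of DeepSeek's code or any library. Each 1x128 activation tile and each 128x128 weight block gets its own scale, and the GEMM multiplies each K-group's partial sum by the matching pair of scales, which is the per-group dequantization along K described above. Python floats accumulate in double precision, so the sketch shows the scaling structure, not Tensor Core accumulation behavior.

```python
import math

E4M3_MAX = 448.0  # largest finite magnitude representable in FP8 E4M3
GROUP = 128       # group size along the inner dimension K (and along N for weights)


def to_e4m3(x: float) -> float:
    """Emulate round-to-nearest into E4M3 (3 mantissa bits), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(x)            # x = m * 2**e with 0.5 <= |m| < 1
    step = 2.0 ** (max(e, -5) - 4)  # spacing of E4M3 values here; subnormals below 2**-6
    return max(-E4M3_MAX, min(E4M3_MAX, round(x / step) * step))


def scale_for(values) -> float:
    """Per-group scaling factor mapping the group's max |value| onto E4M3_MAX."""
    amax = max(abs(v) for v in values)
    return amax / E4M3_MAX if amax > 0 else 1.0


def quantize_activations(a):
    """1x128 tiles: one scale per token (row) per 128 channels along K."""
    q, s = [], []
    for row in a:
        q_row, s_row = [], []
        for g in range(0, len(row), GROUP):
            tile = row[g:g + GROUP]
            sc = scale_for(tile)
            q_row += [to_e4m3(v / sc) for v in tile]
            s_row.append(sc)
        q.append(q_row)
        s.append(s_row)
    return q, s


def quantize_weights(w):
    """128x128 blocks: one scale per 128 input channels (K) x 128 output channels (N)."""
    k_dim, n_dim = len(w), len(w[0])
    q = [[0.0] * n_dim for _ in range(k_dim)]
    s = []
    for kb in range(0, k_dim, GROUP):
        s_row = []
        k_end = min(kb + GROUP, k_dim)
        for nb in range(0, n_dim, GROUP):
            n_end = min(nb + GROUP, n_dim)
            sc = scale_for([w[k][n] for k in range(kb, k_end) for n in range(nb, n_end)])
            for k in range(kb, k_end):
                for n in range(nb, n_end):
                    q[k][n] = to_e4m3(w[k][n] / sc)
            s_row.append(sc)
        s.append(s_row)
    return q, s


def fp8_gemm(qa, sa, qw, sw):
    """Sum each K-group of quantized products, then dequantize it with sa * sw."""
    m, k_dim, n_dim = len(qa), len(qw), len(qw[0])
    out = [[0.0] * n_dim for _ in range(m)]
    for i in range(m):
        for j in range(n_dim):
            acc = 0.0
            for g in range(0, k_dim, GROUP):
                partial = sum(qa[i][k] * qw[k][j] for k in range(g, min(g + GROUP, k_dim)))
                # per-group dequantization: activation tile scale x weight block scale
                acc += partial * sa[i][g // GROUP] * sw[g // GROUP][j // GROUP]
            out[i][j] = acc
    return out
```

Because each scale covers only one tile or block, an outlier inflates the scale of its own group and leaves the other groups' scales, and therefore their precision, untouched.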

In low-precision training frameworks, overflows and underflows are common challenges due to the limited dynamic range of the FP8 format, which is constrained by its reduced exponent bits. By operating on smaller element groups, our methodology effectively shares exponent bits among the grouped elements, mitigating the impact of the limited dynamic range. An accumulation interval of 128 elements, equivalent to 4 WGMMAs, is the minimum interval that can significantly improve precision without introducing substantial overhead. While these high-precision components incur some memory overhead, their impact can be minimized through efficient sharding across multiple DP ranks in our distributed training system.

Applications: Gen2 is a game-changer across multiple domains: it's instrumental in producing engaging advertisements, demos, and explainer videos for marketing; creating concept art and scenes in filmmaking and animation; developing educational and training videos; and producing captivating content for social media, entertainment, and interactive experiences. By leveraging the flexibility of Open WebUI, I've been able to break free from the shackles of proprietary chat platforms and take my AI experiences to the next level. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models and AutoCoder: Enhancing Code with Large Language Models are related papers that explore similar themes and advancements in the field of code intelligence.
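The claim that promoting partial sums to higher precision every 128 elements improves accuracy can be illustrated with a small simulation. This is a sketch under assumptions, not DeepSeek's kernel: the limited-precision accumulator is modeled as keeping 14 explicit mantissa bits (an illustrative figure), a Python float (double) stands in for the FP32 accumulator on the CUDA Cores, and the function names are hypothetical.

```python
import math


def round_mantissa(x: float, bits: int) -> float:
    """Round x to a float with `bits` explicit mantissa bits (exponent range ignored)."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(x)          # x = m * 2**e with 0.5 <= |m| < 1
    step = 2.0 ** (e - 1 - bits)  # spacing of representable values in this binade
    return round(x / step) * step


def dot_limited(products, bits):
    """Keep every partial sum in the limited-precision accumulator."""
    acc = 0.0
    for p in products:
        acc = round_mantissa(acc + p, bits)
    return acc


def dot_promoted(products, bits, interval=128):
    """Accumulate `interval` products at limited precision, then add that partial
    sum to a full-precision accumulator and start a fresh limited-precision one."""
    total = 0.0
    for start in range(0, len(products), interval):
        total += dot_limited(products[start:start + interval], bits)
    return total
```

With a long inner dimension (for example 4096 products of 0.1), the single limited-precision accumulator grows large enough that each small addend is rounded coarsely and the error compounds, while the promoted version never lets the limited accumulator exceed one 128-element chunk. This is also why the problem worsens as K grows.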

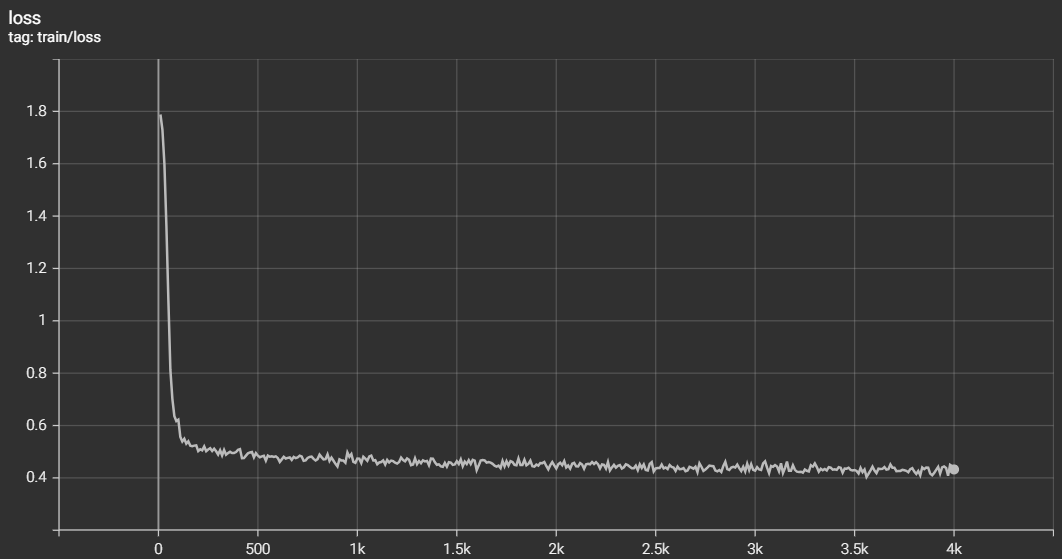

The paper presents a compelling approach to improving the mathematical reasoning capabilities of large language models, and the results achieved by DeepSeekMath 7B are impressive. We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, particularly DeepSeek-V3. A promising direction is the use of large language models (LLMs), which have been shown to have good reasoning capabilities when trained on large corpora of text and math. FP8-LM: Training FP8 large language models. This problem becomes more pronounced when the inner dimension K is large (Wortsman et al., 2023), a typical scenario in large-scale model training where the batch size and model width are increased. During training, we preserve the Exponential Moving Average (EMA) of the model parameters for early estimation of the model performance after learning rate decay. However, when I started learning Grid, everything changed. However, the criteria defining what constitutes an "acute" or "national security risk" are considerably elastic. However, in non-democratic regimes or countries with limited freedoms, particularly autocracies, the answer becomes Disagree because the government may have different standards and restrictions on what constitutes acceptable criticism.
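Keeping an EMA of the parameters during training can be sketched in a few lines. This assumes the parameters are a flat list of floats and uses an illustrative default decay of 0.999 (the text does not state the decay value); `ema_update` is a hypothetical helper, not DeepSeek's implementation.

```python
def ema_update(ema, params, decay=0.999):
    """In-place EMA update of a shadow copy: ema <- decay * ema + (1 - decay) * params."""
    for i, p in enumerate(params):
        ema[i] = decay * ema[i] + (1.0 - decay) * p
    return ema
```

The shadow copy is updated after each optimizer step but never receives gradients itself; evaluating it gives an early estimate of how the model would perform once the learning rate has decayed.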

However, the master weights (stored by the optimizer) and gradients (used for batch size accumulation) are still retained in FP32 to ensure numerical stability throughout training. You have to have the code that matches it up, and sometimes you can reconstruct it from the weights. In Appendix B.2, we further discuss the training instability when we group and scale activations on a block basis in the same way as weight quantization. Comparing their technical reports, DeepSeek appears the most gung-ho about safety training: in addition to gathering safety data that include "various sensitive topics," DeepSeek also established a twenty-person group to construct test cases for a variety of safety categories, while paying attention to changing methods of inquiry so that the models would not be "tricked" into providing unsafe responses. Made by Stable Code authors using the bigcode-evaluation-harness test repo. These targeted retentions of high precision ensure stable training dynamics for DeepSeek-V3. For this reason, after careful investigation, we maintain the original precision (e.g., BF16 or FP32) for the following components: the embedding module, the output head, MoE gating modules, normalization operators, and attention operators.
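Why the master weights stay in FP32 shows up in a toy simulation: an optimizer update smaller than the low-precision format's resolution simply disappears. The sketch below assumes plain SGD with a constant gradient, emulates BF16 in software (7 explicit mantissa bits, exponent range ignored), and uses a Python float (double) as a stand-in for FP32; `round_to_bf16` and `sgd_steps` are hypothetical names.

```python
import math


def round_to_bf16(x: float) -> float:
    """Emulate BF16 rounding: 7 explicit mantissa bits, exponent range ignored."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(x)        # x = m * 2**e with 0.5 <= |m| < 1
    step = 2.0 ** (e - 1 - 7)   # spacing of BF16 values in this binade
    return round(x / step) * step


def sgd_steps(w0, grad, lr, steps):
    """Compare a weight stored only in BF16 with an FP32-style master weight."""
    w_bf16 = round_to_bf16(w0)  # weight kept only in low precision
    master = w0                 # high-precision master copy held by the optimizer
    for _ in range(steps):
        w_bf16 = round_to_bf16(w_bf16 - lr * grad)  # tiny update is rounded away
        master = master - lr * grad                 # tiny update is preserved
    compute_copy = round_to_bf16(master)  # low-precision copy used for compute
    return w_bf16, master, compute_copy
```

For example, `sgd_steps(1.0, 1.0, 1e-4, 100)` leaves the BF16-only weight stuck at 1.0, while the master weight reaches about 0.99 and its BF16 compute copy reflects the accumulated change.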
