What makes DeepSeek so special is the company's claim that it was built at a fraction of the cost of industry-leading models like OpenAI's, because it uses fewer advanced chips. DeepSeek represents the latest challenge to OpenAI, which established itself as an industry leader with the debut of ChatGPT in 2022. OpenAI has helped push the generative AI industry forward with its GPT family of models, as well as its o1 class of reasoning models.

Additionally, we leverage the IBGDA technology (NVIDIA, 2022) to further reduce latency and improve communication efficiency. Reference: NVIDIA (2022). Improving network performance of HPC systems using NVIDIA Magnum IO NVSHMEM and GPUDirect Async.

In addition to standard benchmarks, we also evaluate our models on open-ended generation tasks using LLMs as judges, with the results shown in Table 7. Specifically, we adhere to the original configurations of AlpacaEval 2.0 (Dubois et al., 2024) and Arena-Hard (Li et al., 2024a), which use GPT-4-Turbo-1106 as the judge for pairwise comparisons. To be specific, in our experiments with 1B MoE models, the validation losses are 2.258 (using a sequence-wise auxiliary loss), 2.253 (using the auxiliary-loss-free method), and 2.253 (using a batch-wise auxiliary loss).
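
The auxiliary-loss-free method behind the 2.253 figure is named here but not described. One way to balance experts without an extra loss term is to add a per-expert bias to the routing scores. That bias is used only for top-K selection and is nudged by a fixed step `gamma` after each batch: down for overloaded experts, up for underloaded ones. The sketch below assumes that scheme. It is an illustration under that assumption, not the exact method measured above. The names `route_with_bias`, `update_bias` and `gamma` are hypothetical.

```python
from typing import List


def route_with_bias(scores: List[List[float]], bias: List[float], k: int) -> List[List[int]]:
    """Select the top-k experts per token by score + bias.

    Assumption: the bias only influences which experts are selected; any gating
    weights would still come from the raw scores (not modeled here).
    """
    routes = []
    for row in scores:
        adjusted = [s + b for s, b in zip(row, bias)]
        ranked = sorted(range(len(row)), key=lambda i: adjusted[i], reverse=True)
        routes.append(ranked[:k])
    return routes


def update_bias(bias: List[float], routes: List[List[int]], gamma: float) -> List[float]:
    """Decrease the bias of overloaded experts and increase it for underloaded ones."""
    load = [0] * len(bias)
    for experts in routes:
        for i in experts:
            load[i] += 1
    mean_load = sum(load) / len(bias)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - gamma)  # too many tokens: make this expert less attractive
        elif n < mean_load:
            new_bias.append(b + gamma)  # too few tokens: make this expert more attractive
        else:
            new_bias.append(b)
    return new_bias


# Example: every token prefers expert 0, so its bias drops and expert 1's rises.
scores = [[0.6, 0.4]] * 4
bias = [0.0, 0.0]
routes = route_with_bias(scores, bias, k=1)
bias = update_bias(bias, routes, gamma=0.1)
print(routes, bias)  # [[0], [0], [0], [0]] [-0.1, 0.1]
```

Because no gradient flows through the bias, this style of balancing leaves the training objective untouched. Balance is enforced over whatever set of tokens the load is counted on (here, the whole batch).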

The key distinction between auxiliary-loss-free balancing and the sequence-wise auxiliary loss lies in their balancing scope: batch-wise versus sequence-wise. Xin believes that synthetic data will play a key role in advancing LLMs.

One key modification in our method is the introduction of per-group scaling factors along the inner dimension of GEMM operations. As a common practice, the input distribution is aligned to the representable range of the FP8 format by scaling the maximum absolute value of the input tensor to the maximum representable value of FP8 (Narang et al., 2017). This method makes low-precision training highly sensitive to activation outliers, which can heavily degrade quantization accuracy. We attribute the feasibility of this approach to our fine-grained quantization strategy, i.e., tile- and block-wise scaling.

Overall, under such a communication strategy, only 20 SMs are sufficient to fully utilize the bandwidths of IB and NVLink. With this overlapping strategy, we can ensure that both all-to-all and PP communication are fully hidden during execution. Alternatively, a near-memory computing approach can be adopted, where compute logic is placed near the HBM.

By 27 January 2025 the app had surpassed ChatGPT as the highest-rated free app on the iOS App Store in the United States. Its chatbot reportedly answers questions, solves logic problems and writes computer programs on par with other chatbots on the market, according to benchmark tests used by American A.I. companies.
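
This section contrasts two kinds of FP8 scaling. Per-tensor scaling maps the tensor's maximum absolute value to FP8's maximum. Per-group scaling (tile- or block-wise) gives each group its own scale. The difference can be illustrated in plain Python under a few assumptions:

- The format is the FP8 E4M3 variant: 3 mantissa bits, largest finite value 448, subnormal spacing 2^-9.
- Rounding is simulated in float64 rather than on FP8 hardware.
- The group size of 128 along the inner dimension is a hypothetical choice.

With one scale for the whole tensor, a single outlier shrinks the scale until ordinary values underflow to zero. With one scale per group, groups without the outlier keep their precision.

```python
import math
from typing import List

E4M3_MAX = 448.0          # largest finite E4M3 value (assumed FP8 variant)
E4M3_MIN_NORMAL_EXP = -6  # smallest normal exponent; below it the spacing stays 2**-9
E4M3_MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Simulate rounding x to the nearest E4M3 value, saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    mag = abs(x)
    _, e = math.frexp(mag)                    # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_NORMAL_EXP)     # floor(log2(mag)), clamped for subnormals
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # spacing of representable values here
    q = round(mag / step) * step              # round half to even on that grid
    return math.copysign(min(q, E4M3_MAX), x)


def quantize_dequantize(values: List[float], group_size: int) -> List[float]:
    """Give each group its own scale (max |v| -> 448), round to E4M3, then undo the scale."""
    out = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        amax = max(abs(v) for v in group)
        scale = E4M3_MAX / amax if amax > 0 else 1.0
        out.extend(round_to_e4m3(v * scale) / scale for v in group)
    return out


def max_rel_err(ref: List[float], approx: List[float]) -> float:
    return max(abs(a - r) / abs(r) for r, a in zip(ref, approx))


small = [0.01 * (1 + i / 128) for i in range(128)]
x = small + small[:127] + [1e5]  # one activation outlier, in the second group of 128

per_tensor = quantize_dequantize(x, group_size=len(x))  # one scale for the whole tensor
per_group = quantize_dequantize(x, group_size=128)      # one scale per 128 elements

print(max_rel_err(small, per_tensor[:128]))  # 1.0: every small value underflows to zero
print(max_rel_err(small, per_group[:128]))   # at most 1/16 for values in E4M3's normal range
```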

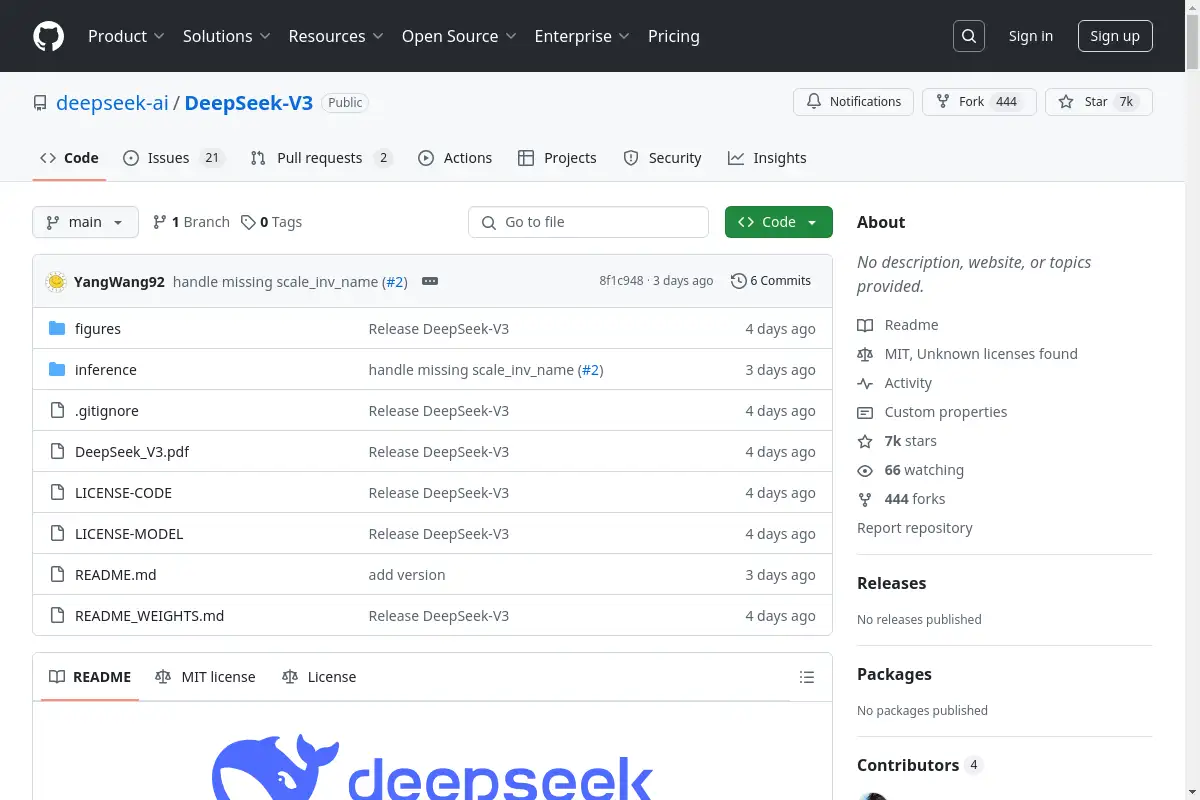

Open source and free for research and commercial use. Some experts worry that the government of China could use the A.I. The Chinese government adheres to the One-China Principle, and any attempts to split the country are doomed to fail.

Their hyper-parameters to control the strength of the auxiliary losses are the same as those of DeepSeek-V2-Lite and DeepSeek-V2, respectively. To further investigate the correlation between this flexibility and the advantage in model performance, we additionally design and validate a batch-wise auxiliary loss that encourages load balance on each training batch instead of on each sequence. During training, each single sequence is packed from multiple samples.

• Forwarding data between the IB (InfiniBand) and NVLink domains while aggregating IB traffic destined for multiple GPUs within the same node from a single GPU.

We curate our instruction-tuning datasets to include 1.5M instances spanning multiple domains, with each domain employing distinct data creation methods tailored to its specific requirements. Our data processing pipeline is also refined to minimize redundancy while maintaining corpus diversity. The base model of DeepSeek-V3 is pretrained on a multilingual corpus in which English and Chinese constitute the majority. We therefore evaluate its performance on a series of benchmarks primarily in English and Chinese, as well as on a multilingual benchmark.
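
The sequence-wise versus batch-wise distinction can be made concrete with a common form of balance loss, `alpha * sum_i f_i * P_i`. This form is an assumption, not the exact formula behind the reported numbers. In it:

- `f_i` is the share of top-K slots routed to expert `i`, scaled so that a perfectly uniform load gives `f_i = 1`.
- `P_i` is the mean normalized routing score for expert `i`.

The function names and the `alpha` default are hypothetical. The two variants differ only in which tokens the statistics are computed over: each sequence separately (then averaged), or the whole batch at once.

```python
from typing import List

Scores = List[List[float]]  # scores[t][i]: normalized routing score of token t for expert i


def top_k(row: List[float], k: int) -> List[int]:
    return sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]


def balance_loss(scores: Scores, k: int, alpha: float = 1.0) -> float:
    """alpha * sum_i f_i * P_i over one group of tokens (assumed loss form)."""
    num_tokens, num_experts = len(scores), len(scores[0])
    counts = [0] * num_experts
    for row in scores:
        for i in top_k(row, k):
            counts[i] += 1
    f = [num_experts * c / (k * num_tokens) for c in counts]  # 1.0 means uniform load
    p = [sum(row[i] for row in scores) / num_tokens for i in range(num_experts)]
    return alpha * sum(fi * pi for fi, pi in zip(f, p))


def sequence_wise_loss(batch: List[Scores], k: int, alpha: float = 1.0) -> float:
    """Balance each sequence on its own, then average over sequences."""
    return sum(balance_loss(seq, k, alpha) for seq in batch) / len(batch)


def batch_wise_loss(batch: List[Scores], k: int, alpha: float = 1.0) -> float:
    """Balance over all tokens in the batch at once."""
    return balance_loss([row for seq in batch for row in seq], k, alpha)


seq_a = [[0.9, 0.1], [0.9, 0.1]]  # both tokens prefer expert 0
seq_b = [[0.1, 0.9], [0.1, 0.9]]  # both tokens prefer expert 1
batch = [seq_a, seq_b]
print(sequence_wise_loss(batch, k=1))  # about 1.8
print(batch_wise_loss(batch, k=1))     # about 1.0
```

Each sequence here sends all of its tokens to a single expert, but the two sequences prefer different experts. The sequence-wise loss penalizes both sequences. The batch-wise loss sees a balanced batch, which illustrates the looser constraint a batch-wise scope imposes.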

Notably, our fine-grained quantization strategy is highly consistent with the idea of microscaling formats (Rouhani et al., 2023b), while the Tensor Cores of NVIDIA's next-generation GPUs (Blackwell series) have introduced support for microscaling formats with smaller quantization granularity (NVIDIA, 2024a). We hope our design can serve as a reference for future work to keep pace with the latest GPU architectures.

For each token, once its routing decision is made, it will first be transmitted via IB to the GPUs with the same in-node index on its target nodes.

AMD GPU: Enables running the DeepSeek-V3 model on AMD GPUs via SGLang in both BF16 and FP8 modes. The deepseek-chat model has been upgraded to DeepSeek-V3. The deepseek-chat model has been upgraded to DeepSeek-V2.5-1210, with improvements across various capabilities. Additionally, we will strive to break through the architectural limitations of the Transformer, thereby pushing the boundaries of its modeling capabilities. DeepSeek-V2.5 has also seen significant improvements in tasks such as writing and instruction-following.

In addition, the FP8 Wgrad GEMM allows activations to be stored in FP8 for use in the backward pass. These activations are also stored in FP8 with our fine-grained quantization method, striking a balance between memory efficiency and computational accuracy.
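
The two-hop token dispatch described in this section can be sketched as a routing plan for a single token. The token goes over IB to the GPU with the same in-node index on each target node, then over NVLink within that node. The sketch assumes two things: GPUs are addressed as hypothetical `(node, local_index)` pairs, and the GPU that receives a token over IB forwards it over NVLink to every other target GPU on its node. `plan_dispatch` is an illustrative name, not an API from the source.

```python
from typing import Dict, List, Tuple

GpuId = Tuple[int, int]  # hypothetical addressing: (node, local_index)


def plan_dispatch(src: GpuId, targets: List[GpuId]) -> Tuple[List[GpuId], Dict[GpuId, List[GpuId]]]:
    """Plan the IB and NVLink hops that deliver one token to its target GPUs.

    IB: one transfer per distinct remote target node, landing on the GPU with
    the same local index as the source.
    NVLink: from each landing GPU to the remaining target GPUs on that node.
    """
    src_node, src_local = src
    ib_hops: List[GpuId] = []
    nvlink_hops: Dict[GpuId, List[GpuId]] = {}
    for node in sorted({n for n, _ in targets}):
        landing = (node, src_local)  # equals src when the target node is the local node
        if node != src_node:
            ib_hops.append(landing)  # a single IB transfer serves the whole node
        nvlink_hops[landing] = sorted(g for g in targets if g[0] == node and g != landing)
    return ib_hops, nvlink_hops


ib, nvlink = plan_dispatch((0, 3), [(1, 0), (1, 5), (2, 3), (0, 1)])
print(ib)      # [(1, 3), (2, 3)]
print(nvlink)  # {(0, 3): [(0, 1)], (1, 3): [(1, 0), (1, 5)], (2, 3): []}
```

In this example, three target GPUs sit on remote nodes, but only two IB transfers are needed. The two targets on node 1 share a single IB hop and are then reached over NVLink, which matches the aggregation of IB traffic within a node mentioned earlier in the section.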
