DeepSeek vs ChatGPT - how do they compare? In recent years, it has become best known as the tech behind chatbots such as ChatGPT - and DeepSeek - also known as generative AI. In short, DeepSeek feels very much like ChatGPT without all the bells and whistles. Send a test message like "hello" and check whether you get a response from the Ollama server. Vite (pronounced somewhere between "vit" and "veet", since it's the French word for "fast") is a direct replacement for create-react-app's features, in that it offers a fully configurable development environment with a hot reload server and plenty of plugins. This approach allows the model to explore chain-of-thought (CoT) for solving complex problems, resulting in the development of DeepSeek-R1-Zero. Note: this model is bilingual in English and Chinese. Why this matters - compute is the only thing standing between Chinese AI companies and the frontier labs in the West: This interview is the latest example of how access to compute is the only remaining factor that differentiates Chinese labs from Western labs. He specializes in reporting on everything to do with AI and has appeared on BBC TV shows like BBC One Breakfast and on Radio 4 commenting on the latest developments in tech.
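The "hello" check against the Ollama server can be sketched roughly as follows. This is a minimal sketch, not a definitive setup: it assumes Ollama is running locally on its default port (11434) and that a model has already been pulled. The model name `deepseek-r1` and the helper names `build_chat_request` and `send_test_message` are illustrative assumptions, not something the text above specifies.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/chat"
# Assumption: replace with whichever model you have actually pulled.
MODEL_NAME = "deepseek-r1"


def build_chat_request(prompt, model=MODEL_NAME, stream=False):
    """Build a POST request for Ollama's chat endpoint (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # stream=False asks for a single JSON reply instead of chunked lines.
        "stream": stream,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_test_message(prompt="hello", timeout=30):
    """Send the prompt and return the assistant's reply text (hypothetical helper)."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # A non-streaming chat reply carries the text under message.content.
    return body["message"]["content"]
```

Calling `send_test_message()` should return a short greeting if the server is up; a connection error (an `OSError` subclass) usually means the server is not running or is listening on a different address.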

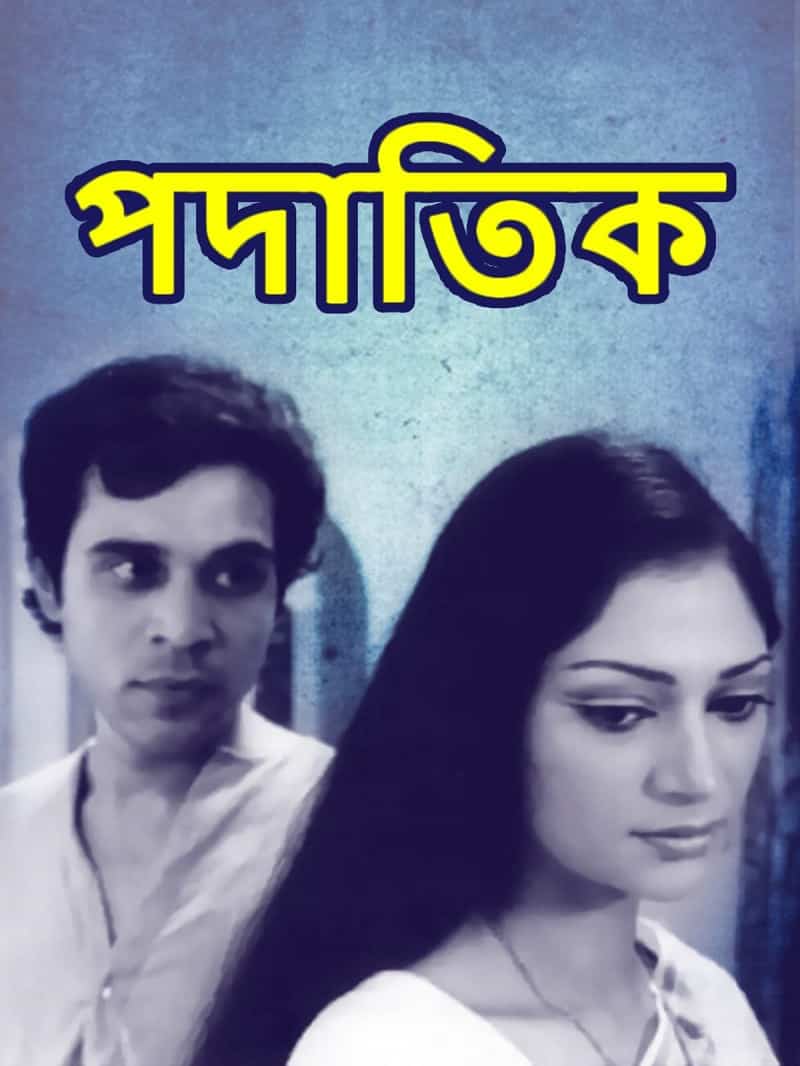

This cover image is the best one I have seen on Dev so far! One example: It is important you know that you are a divine being sent to help these people with their problems. There are three things that I wanted to know. Perhaps more importantly, distributed training seems to me to make many things in AI policy harder to do. After that, they drank a couple more beers and talked about other things. And most importantly, by showing that it works at this scale, Prime Intellect is going to bring more attention to this wildly important and unoptimized part of AI research. Read the technical research: INTELLECT-1 Technical Report (Prime Intellect, GitHub). Read more: Ethical Considerations Around Vision and Robotics (Lucas Beyer blog). Read more: BALROG: Benchmarking Agentic LLM and VLM Reasoning On Games (arXiv). The pipeline incorporates two RL stages aimed at discovering improved reasoning patterns and aligning with human preferences, as well as two SFT stages that serve as the seed for the model's reasoning and non-reasoning capabilities. DeepSeek-V3 is a general-purpose model, while DeepSeek-R1 focuses on reasoning tasks.

Ethical considerations and limitations: While DeepSeek-V2.5 represents a significant technological advancement, it also raises important ethical questions. Anyone want to take bets on when we’ll see the first 30B parameter distributed training run? This is a non-stream example; you can set the stream parameter to true to get a streamed response. In tests across all the environments, the best models (gpt-4o and claude-3.5-sonnet) get 32.34% and 29.98% respectively. For environments that also leverage visual capabilities, claude-3.5-sonnet and gemini-1.5-pro lead with 29.08% and 25.76% respectively. "BALROG is difficult to solve through simple memorization - all of the environments used in the benchmark are procedurally generated, and encountering the same instance of an environment twice is unlikely," they write. Others demonstrated simple but clear examples of advanced Rust usage, like Mistral with its recursive approach or Stable Code with parallel processing. But not like a retail personality - not funny or sexy or therapy oriented. This is why the world’s most powerful models are either made by massive corporate behemoths like Facebook and Google, or by startups that have raised unusually large amounts of capital (OpenAI, Anthropic, XAI). Specifically, patients are generated via LLMs, and the patients have specific illnesses based on real medical literature.

Be specific in your answers, but exercise empathy in how you critique them - they are more fragile than us. In two more days, the run would be complete. DeepSeek-Prover-V1.5 aims to address this by combining two powerful techniques: reinforcement learning and Monte-Carlo Tree Search. Pretty good: They train two types of model, a 7B and a 67B, then they compare performance with the 7B and 70B LLaMa2 models from Facebook. They offer an API to use their new LPUs with a number of open source LLMs (including Llama 3 8B and 70B) on their GroqCloud platform. We do not recommend using Code Llama or Code Llama - Python to perform general natural language tasks, since neither of these models is designed to follow natural language instructions. BabyAI: A simple, two-dimensional grid-world in which the agent has to solve tasks of varying complexity described in natural language. NetHack Learning Environment: "known for its extreme difficulty and complexity."
